垃圾分类

垃圾分为以下两类:

- 语义垃圾(semantic garbage): 有的被称作内存泄露 语义垃圾指的是从语法上可达(可以通过局部、全局变量引用得到)的对象,但从语义上来讲他们是垃圾,垃圾回收器对此无能为力。

- 语法垃圾(syntactic garbage): 语法垃圾是讲那些从语法上无法到达的对象,这些才是垃圾收集器主要的收集目标。

语法垃圾:

1

2

3

4

5

6

7

8

9

10

|

package main

func main() {

alloconheap()

}

func alloconheap() {

var a = make([]int, 10240)

println(a)

}

|

在 allocOnHeap 返回后, 堆上的 a 无法访问 便成为了语法垃圾.

设计理念

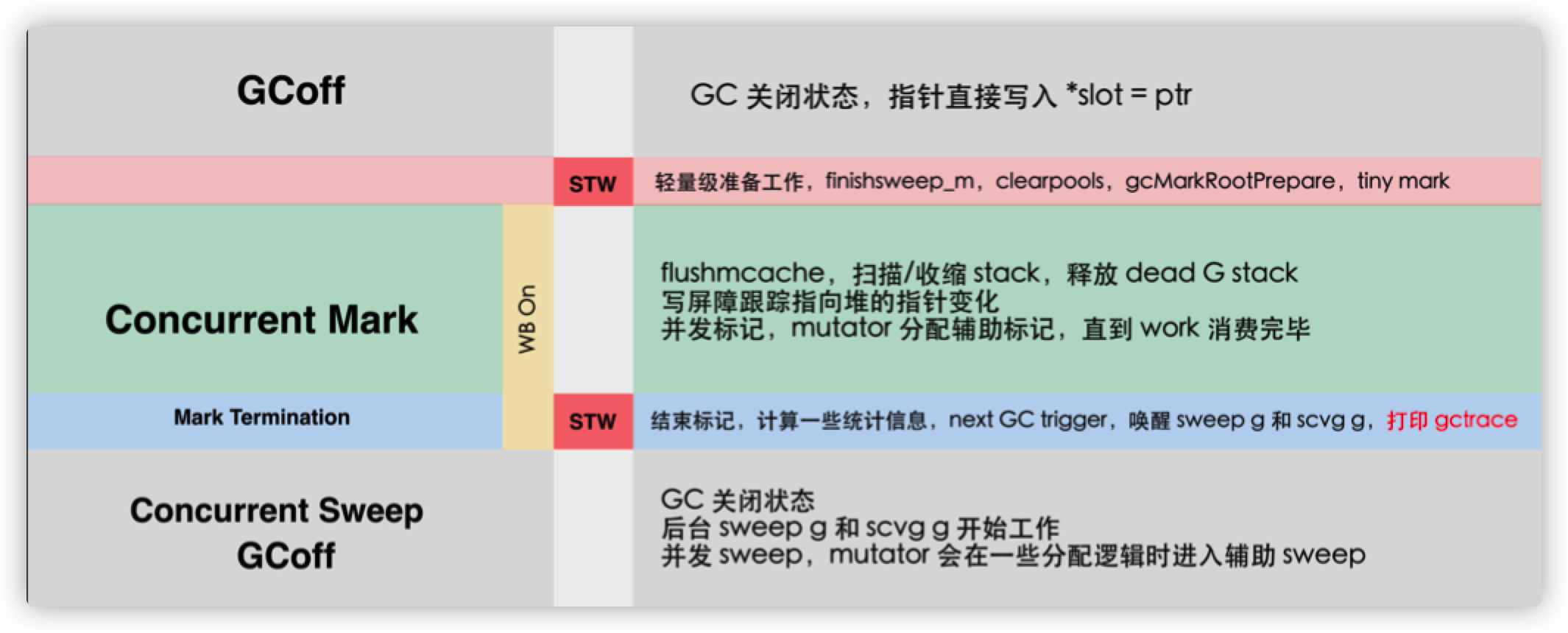

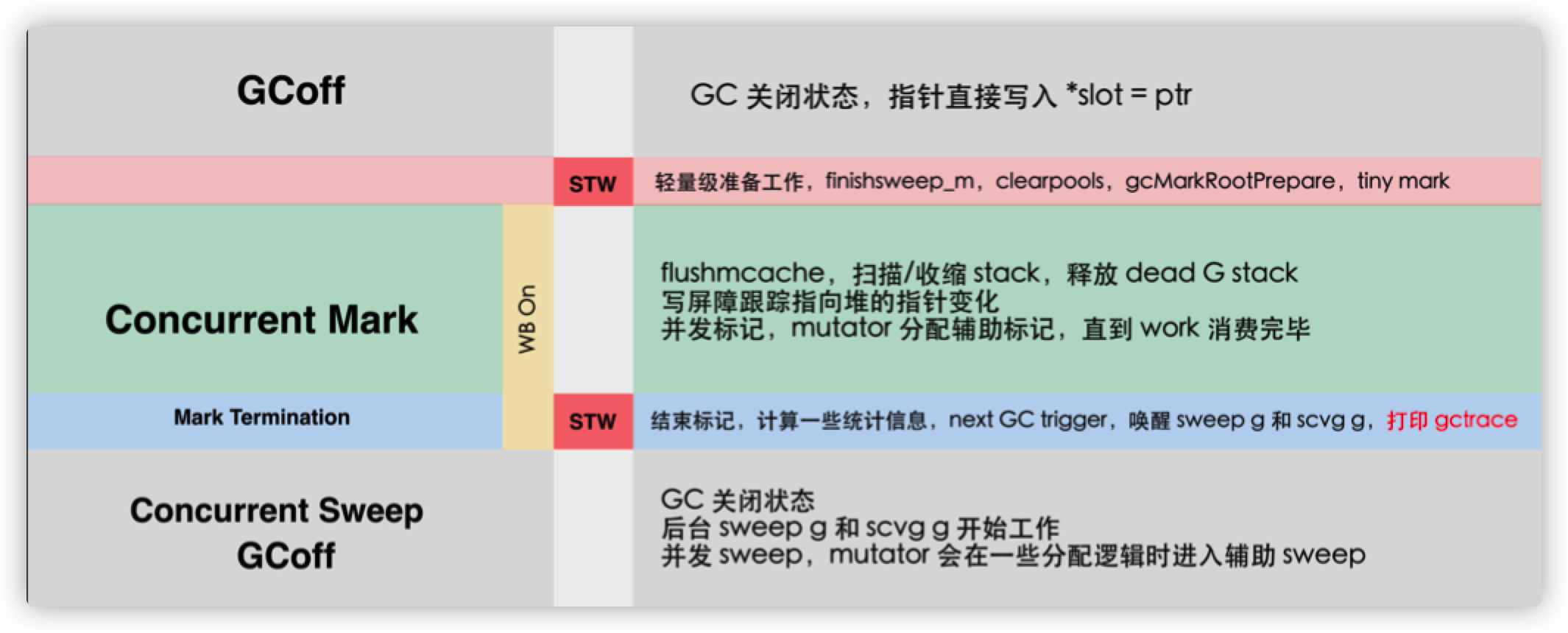

Go 实现的垃圾回收器是无分代(对象没有代际之分)、不整理(回收过程中不对对象进行移动与整理)、并发(与用户代码并发执行)的三色标记清扫算法。 从宏观的角度来看,Go 运行时的垃圾回收器主要包含五个阶段:

- 清理终止阶段(STW):对上个垃圾回收阶段进行一些收尾工作(例如清理缓存池、停止调度器等等),启动写屏障;

- 暂停程序,所有的处理器在这时会进入安全点(Safe point);

- 如果当前垃圾收集循环是强制触发的,我们还需要处理还未被清理的内存管理单元;

- 将状态切换至

_GCmark、开启写屏障、

- 标记阶段(并发):与赋值器并发执行,写屏障处于开启状态;

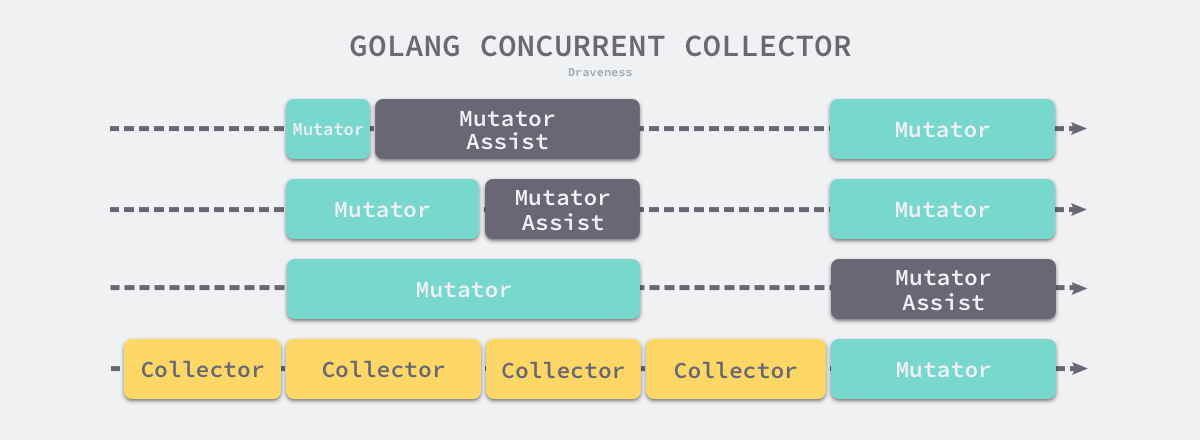

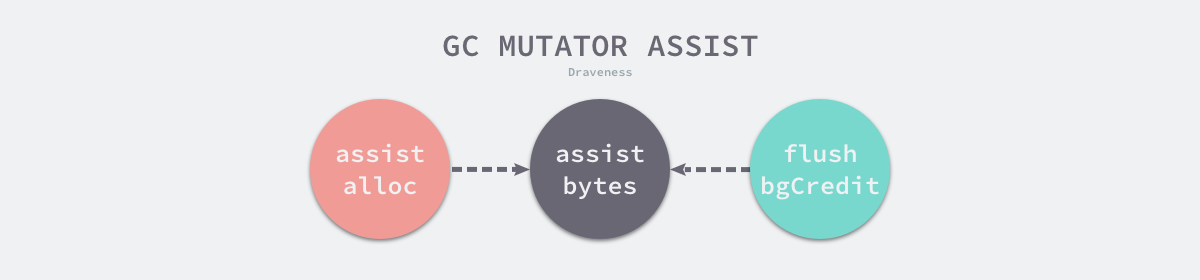

- 用户程序协助(Mutator Assist)并将根对象入队;

- 恢复执行程序,标记进程和用于协助的用户程序会开始并发标记内存中的对象,写屏障会将被覆盖的指针和新指针都标记成灰色,而所有新创建的对象都会被直接标记成黑色;

- 开始扫描根对象,包括所有 Goroutine 的栈、全局对象以及不在堆中的运行时数据结构,扫描 Goroutine 栈期间会暂停当前处理器;

- 依次处理灰色队列中的对象,将对象标记成黑色并将它们指向的对象标记成灰色;

- 使用分布式的终止算法检查剩余的工作,发现标记阶段完成后进入标记终止阶段;

- 标记终止阶段(STW):完成标记阶段的收尾工作,关闭写屏障,并随后对整个 GC 阶段进行的各项数据进行统计。

- 暂停程序、将状态切换至

_GCmarktermination 并关闭辅助标记的用户程序;

- 清理处理器上的线程缓存;

- 将状态切换至

_GCoff 开始清理阶段,初始化清理状态并关闭写屏障;

- 清理阶段(并发):将需要回收的内存归还到堆中,写屏障处于关闭状态;

- 恢复用户程序,所有新创建的对象会标记成白色;

- 后台并发清理所有的内存管理单元,当 Goroutine 申请新的内存管理单元时就会触发清理;

- 内存归还(并发):将过多的内存归还给操作系统,写屏障处于关闭状态

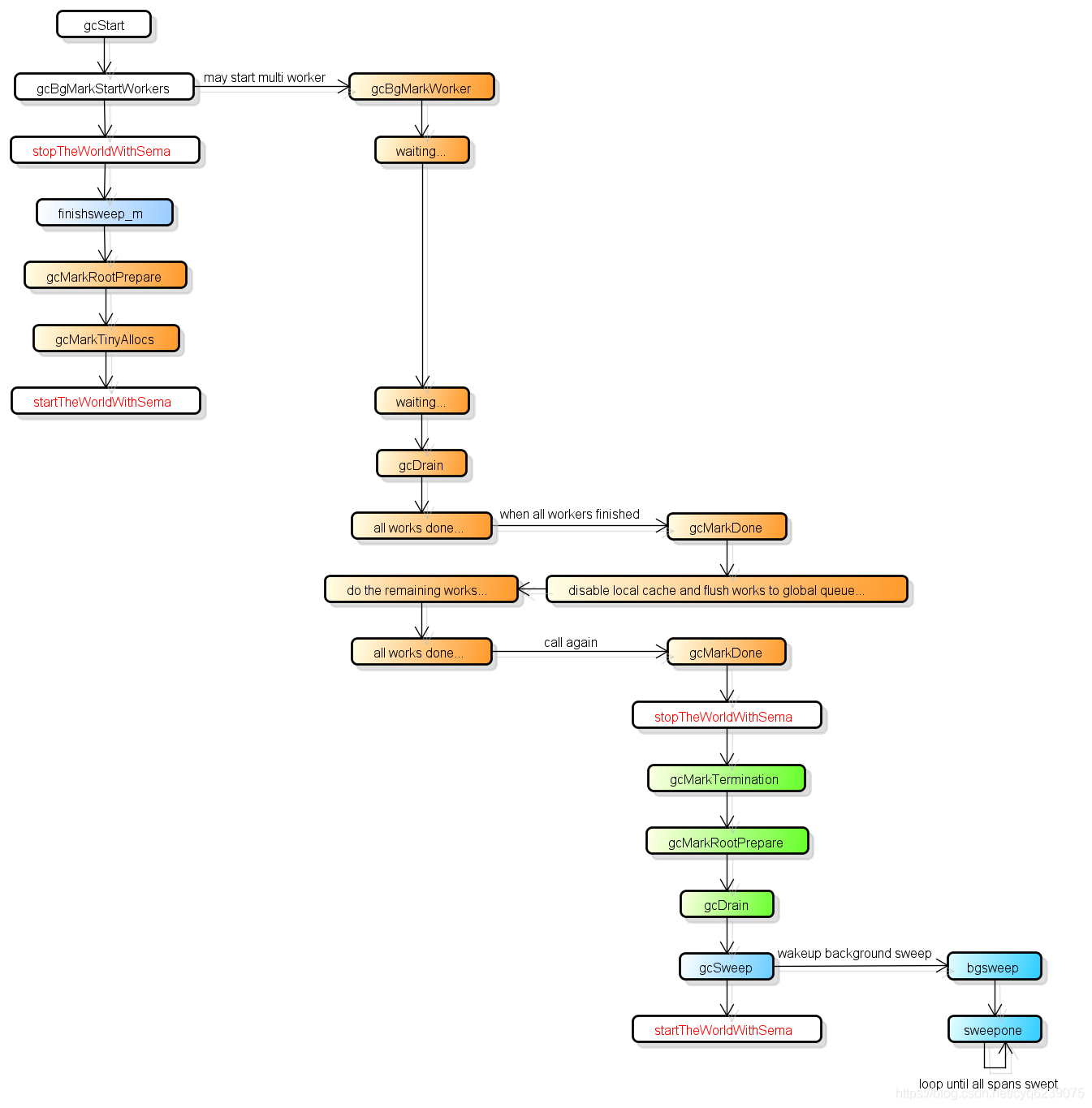

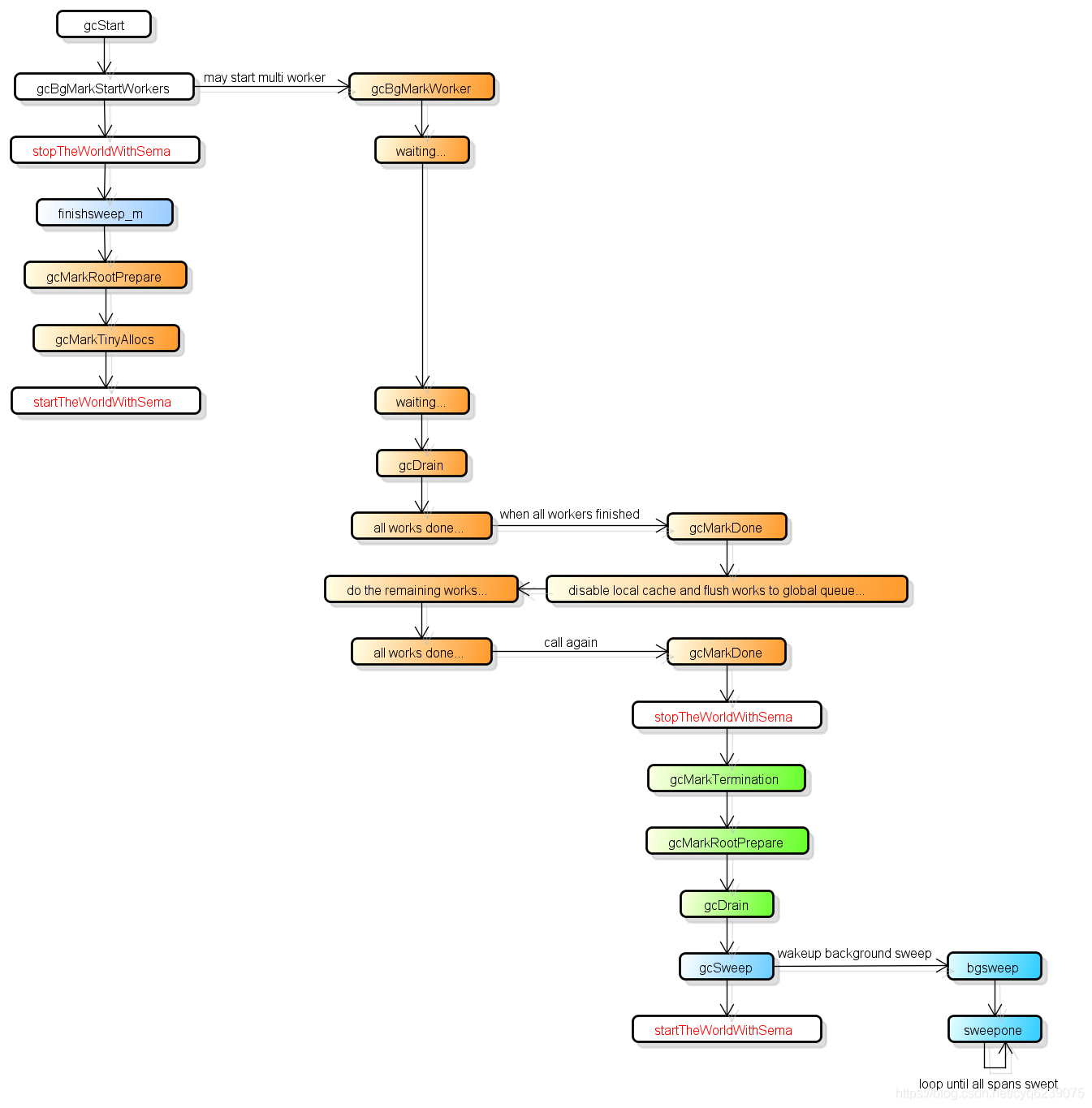

GC流程图:

对象整理的优势是解决内存碎片问题以及“允许”使用顺序内存分配器。 但 Go 运行时的分配算法基于 tcmalloc,基本上没有碎片问题。 并且顺序内存分配器在多线程的场景下并不适用。 Go 使用的是基于 tcmalloc 的现代内存分配算法,对对象进行整理不会带来实质性的性能提升。

分代 GC 依赖分代假设,即 GC 将主要的回收目标放在新创建的对象上(存活时间短,更倾向于被回收), 而非频繁检查所有对象。但 Go 的编译器会通过逃逸分析将大部分新生对象存储在栈上(栈直接被回收), 只有那些需要长期存在的对象才会被分配到需要进行垃圾回收的堆中。 也就是说,分代 GC 回收的那些存活时间短的对象在 Go 中是直接被分配到栈上, 当 goroutine 死亡后栈也会被直接回收,不需要 GC 的参与,进而分代假设并没有带来直接优势。 并且 Go 的垃圾回收器与用户代码并发执行,使得 STW 的时间与对象的代际、对象的 size 没有关系。 Go 团队更关注于如何更好地让 GC 与用户代码并发执行(使用适当的 CPU 来执行垃圾回收), 而非减少停顿时间这一单一目标上。

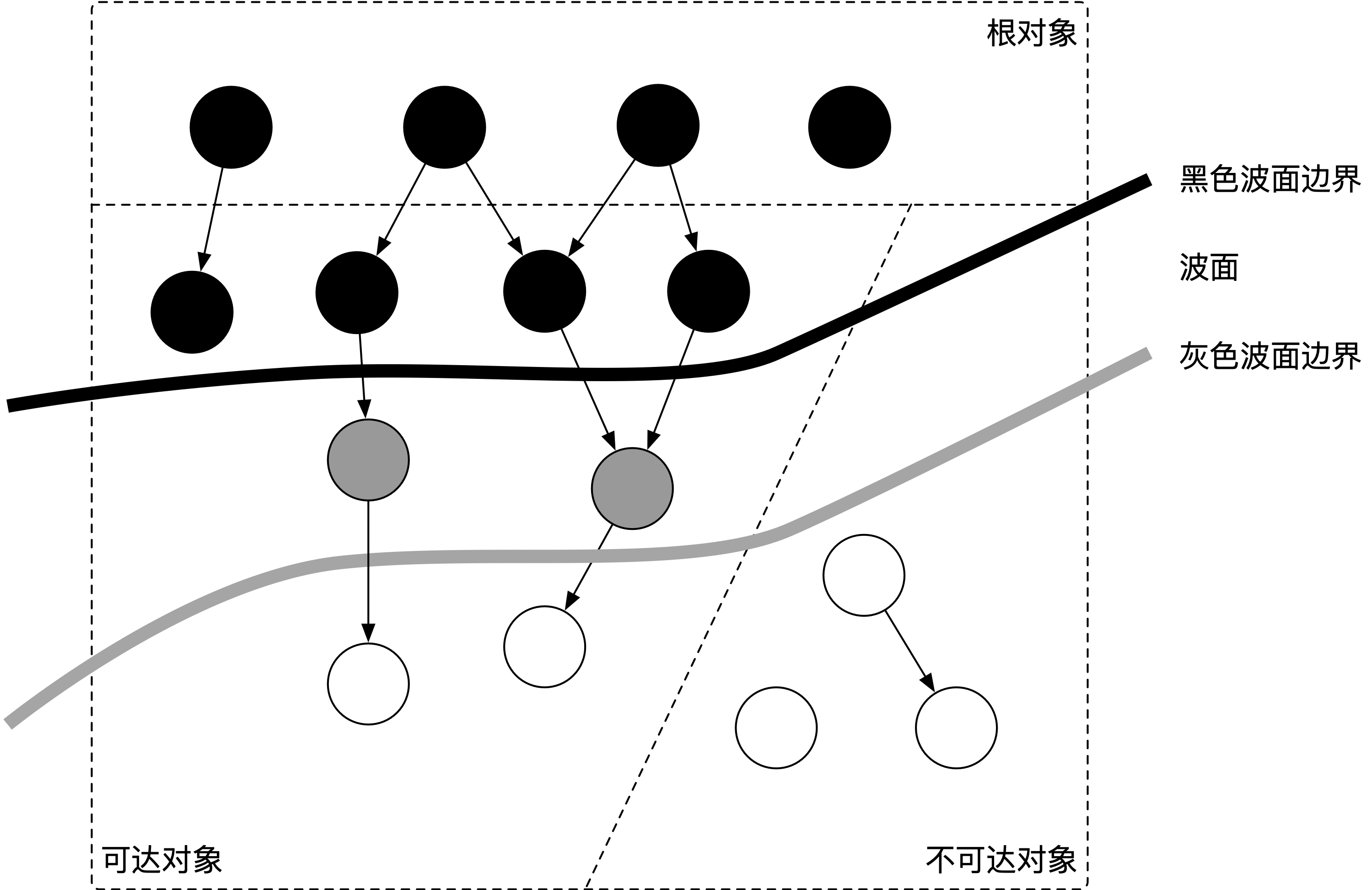

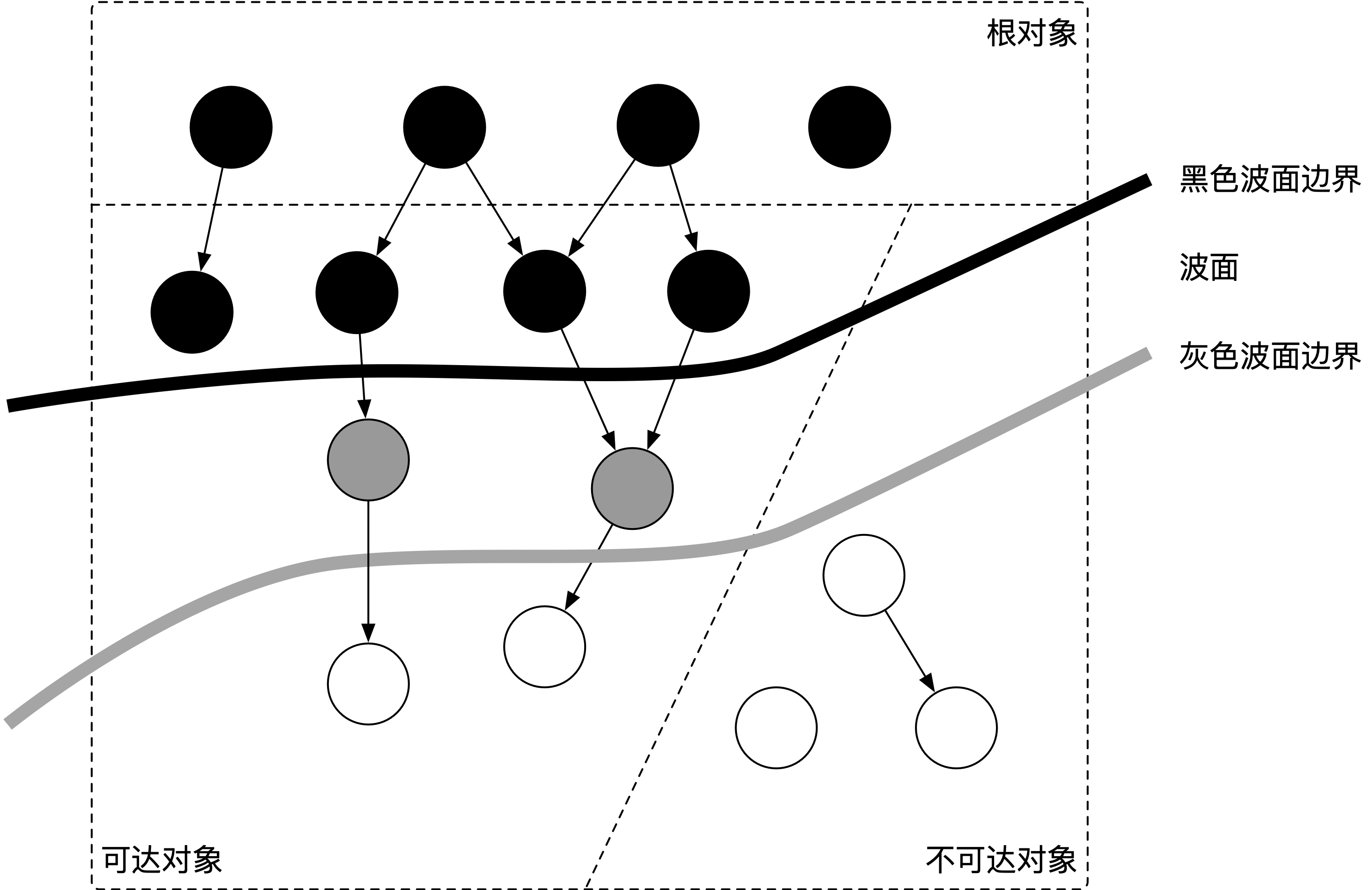

三色抽象

三色抽象只是一种描述追踪式回收器的方法,在实践中并没有实际含义, 它的重要作用在于从逻辑上严密推导标记清理这种垃圾回收方法的正确性。 也就是说,当我们谈及三色标记法时,通常指标记清扫的垃圾回收。

从垃圾回收器的视角来看,三色抽象规定了三种不同类型的对象,并用不同的颜色相称:

- 白色对象(可能死亡):未被回收器访问到的对象。在回收开始阶段,所有对象均为白色,当回收结束后,白色对象均不可达。

- 灰色对象(波面):已被回收器访问到的对象,但回收器需要对其中的一个或多个指针进行扫描,因为他们可能还指向白色对象。

- 黑色对象(确定存活):已被回收器访问到的对象,其中所有字段都已被扫描,黑色对象中任何一个指针都不可能直接指向白色对象。

这样三种不变性所定义的回收过程其实是一个 波面(Wavefront) 不断前进的过程, 这个波面同时也是黑色对象和白色对象的边界,灰色对象就是这个波面。

当垃圾回收开始时,只有白色对象。随着标记过程开始进行时,灰色对象开始出现(着色),这时候波面便开始扩大。当一个对象的所有子节点均完成扫描时,会被着色为黑色。当整个堆遍历完成时,只剩下黑色和白色对象,这时的黑色对象为可达对象,即存活;而白色对象为不可达对象,即死亡。这个过程可以视为以灰色对象为波面,将黑色对象和白色对象分离,使波面不断向前推进,直到所有可达的灰色对象都变为黑色对象为止的过程.

对象的三种颜色可以这样来判断:

1

2

3

4

5

6

7

8

9

|

func isWhite(ref interface{}) bool {

return !isMarked(ref)

}

func isGrey(ref interface{}) bool {

return worklist.Find(ref)

}

func isBlack(ref interface{}) bool {

return isMarked(ref) && !isGrey(ref)

}

|

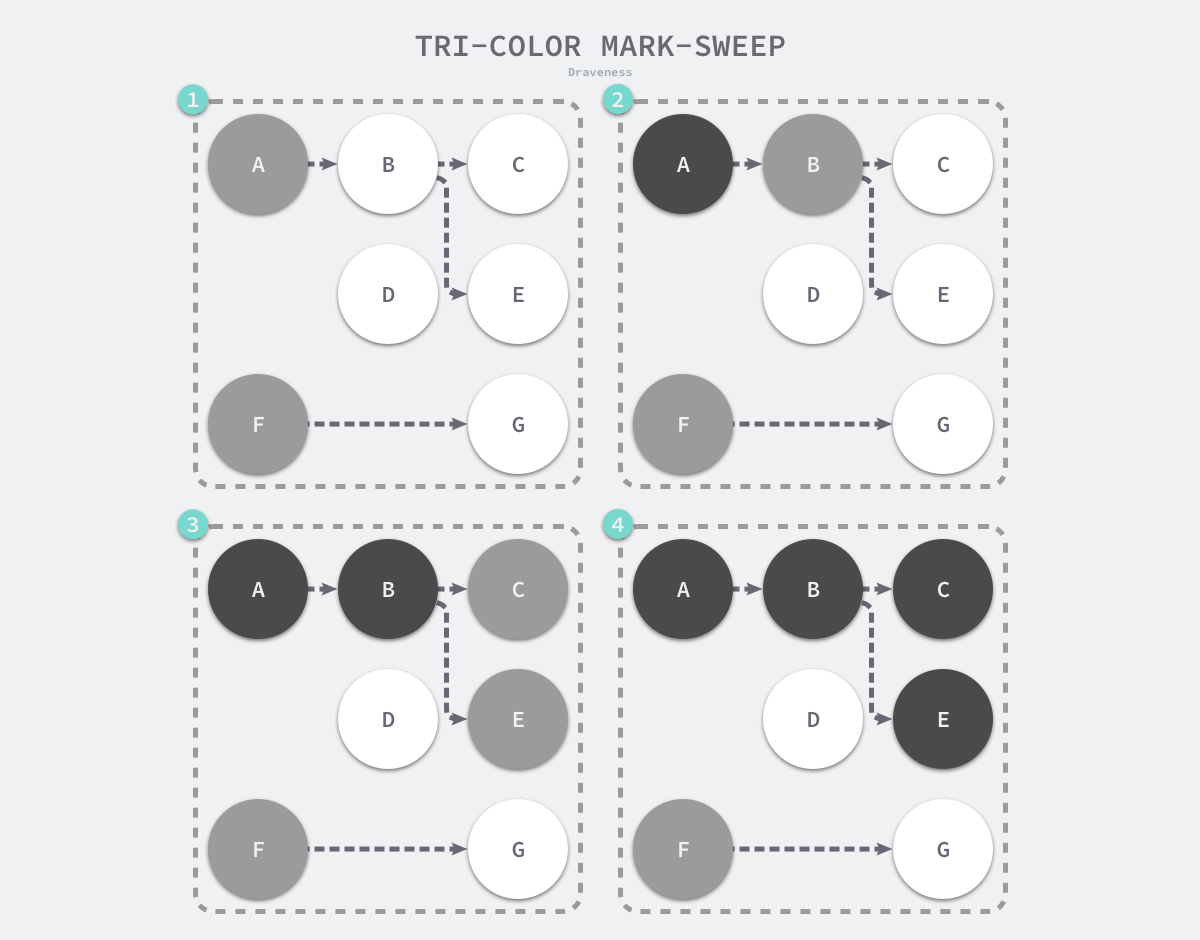

在垃圾收集器开始工作时,程序中不存在任何的黑色对象,垃圾收集的根对象会被标记成灰色,垃圾收集器只会从灰色对象集合中取出对象开始扫描,当灰色集合中不存在任何对象时,标记阶段就会结束。

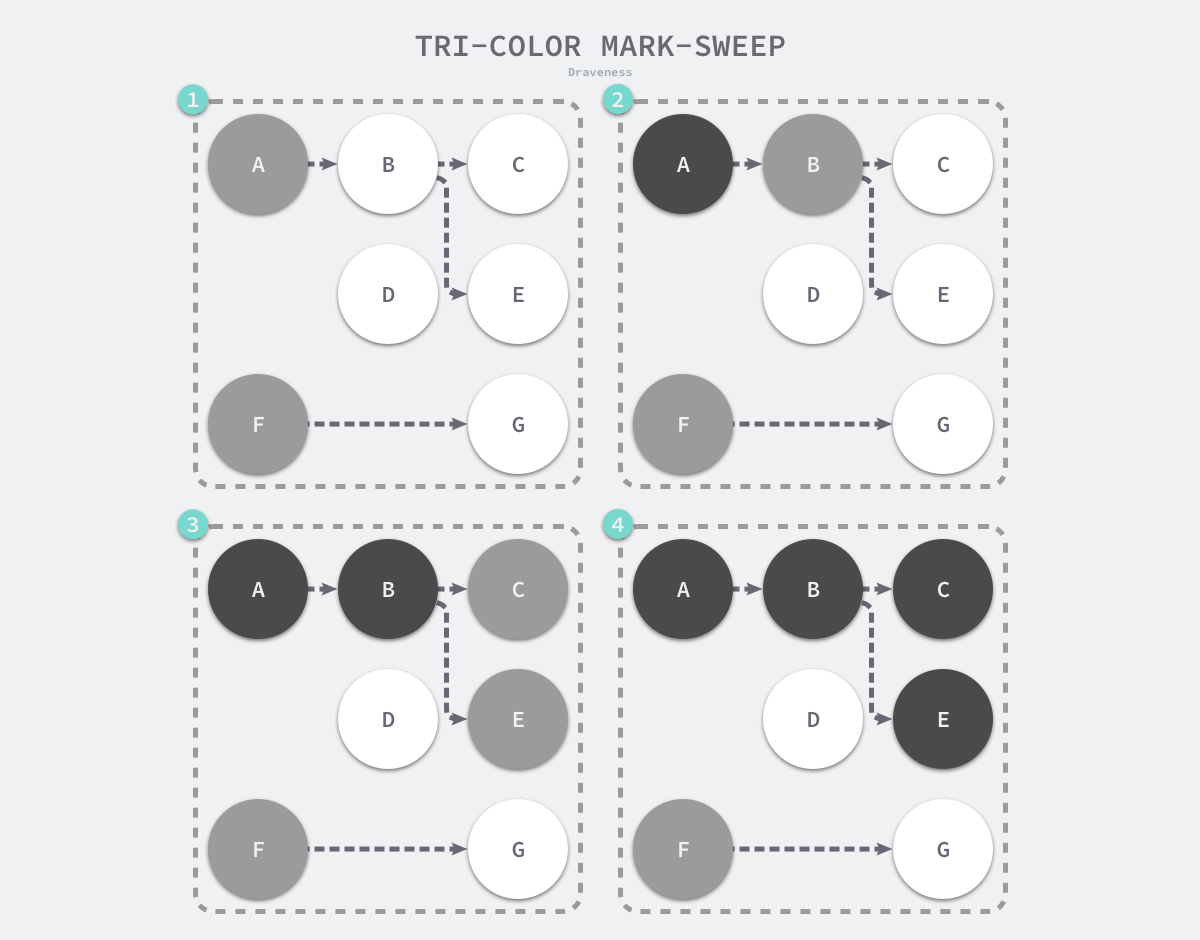

三色标记垃圾收集器的工作原理很简单,我们可以将其归纳成以下几个步骤:

- 从灰色对象的集合中选择一个灰色对象并将其标记成黑色;

- 将黑色对象指向的所有对象都标记成灰色,保证该对象和被该对象引用的对象都不会被回收;

- 重复上述两个步骤直到对象图中不存在灰色对象;

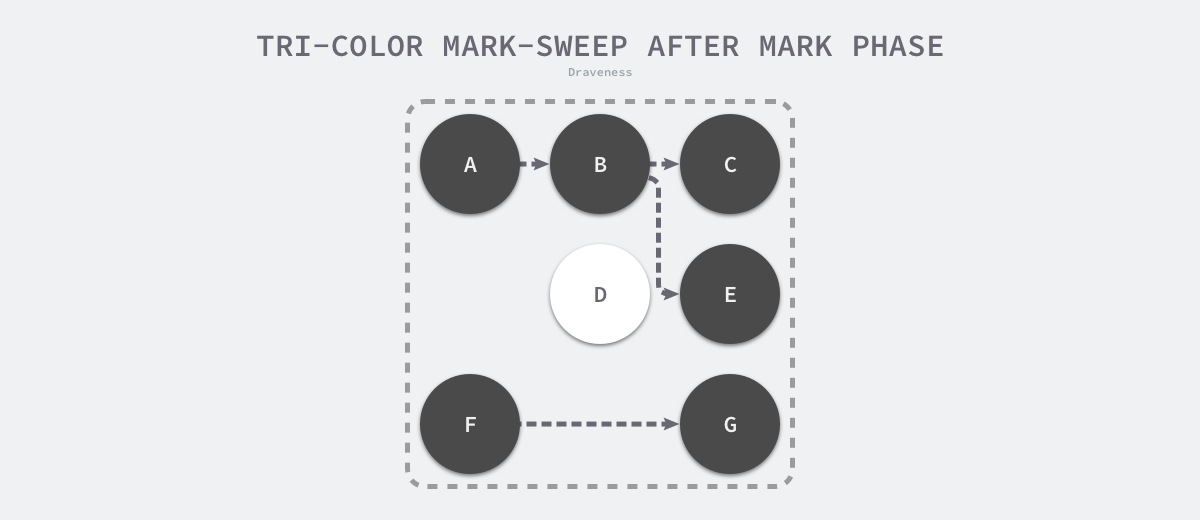

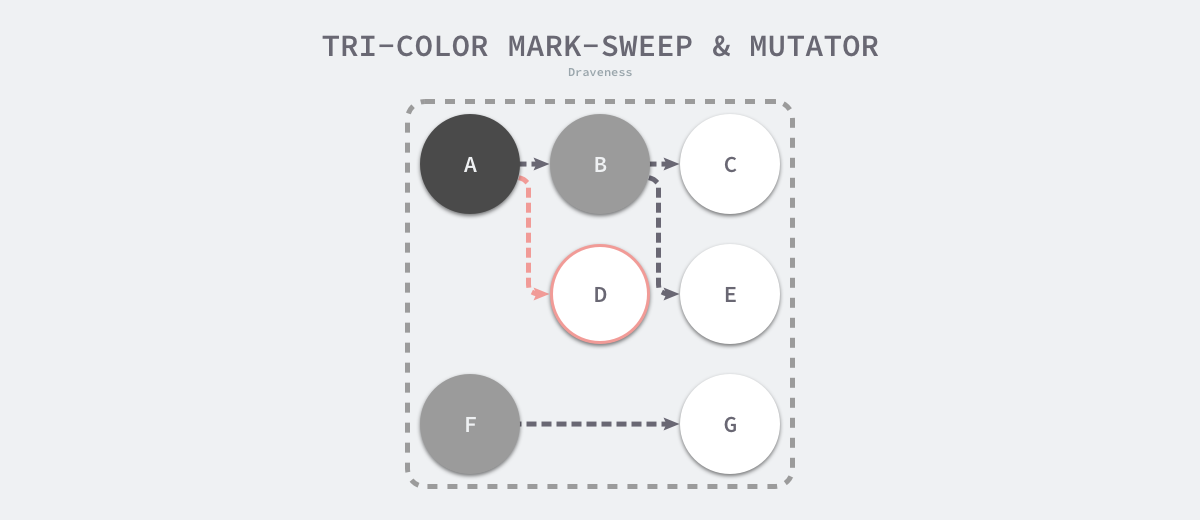

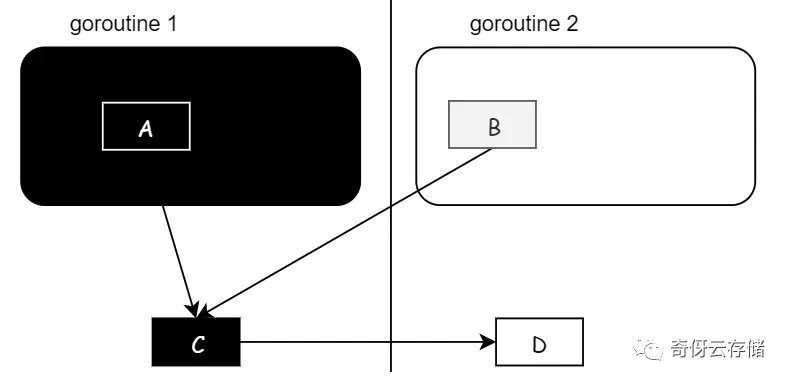

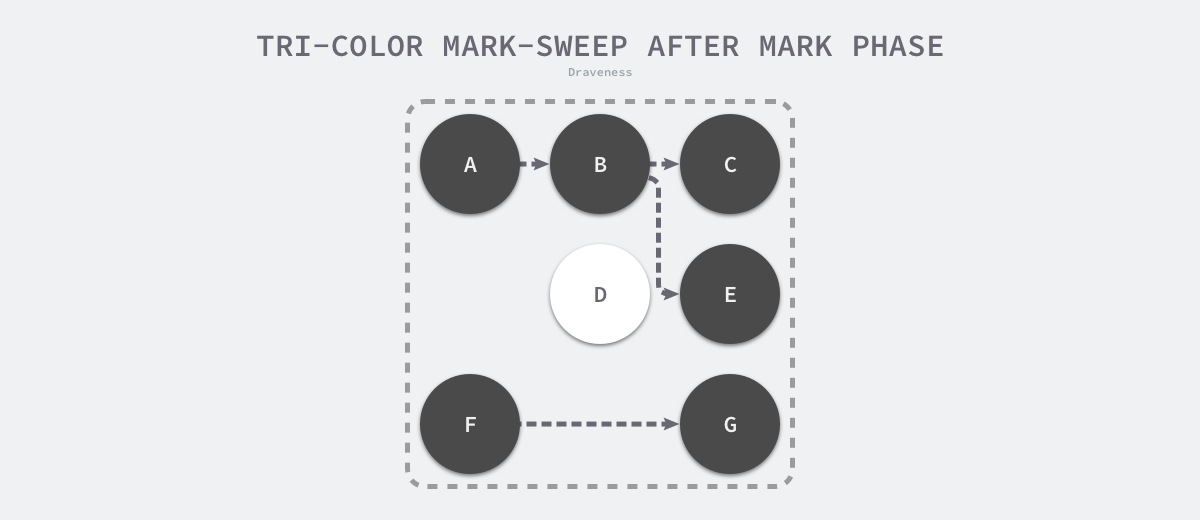

当三色的标记清除的标记阶段结束之后,应用程序的堆中就不存在任何的灰色对象,我们只能看到黑色的存活对象以及白色的垃圾对象,垃圾收集器可以回收这些白色的垃圾,下面是使用三色标记垃圾收集器执行标记后的堆内存,堆中只有对象 D 为待回收的垃圾:

因为用户程序可能在标记执行的过程中修改对象的指针,所以三色标记清除算法本身是不可以并发或者增量执行的,它仍然需要 STW,在如下所示的三色标记过程中,用户程序建立了从 A 对象到 D 对象的引用,但是因为程序中已经不存在灰色对象了,所以 D 对象会被垃圾收集器错误地回收。

本来不应该被回收的对象却被回收了,这在内存管理中是非常严重的错误,我们将这种错误称为悬挂指针,即指针没有指向特定类型的合法对象,影响了内存的安全性,想要并发或者增量地标记对象还是需要使用屏障技术。

有黑、灰、白三个集合,每种颜色的含义:

白色:对象未被标记,gcmarkBits对应的位为0

灰色:对象已被标记,但这个对象包含的子对象未标记,gcmarkBits对应的位为1

黑色:对象已被标记,且这个对象包含的子对象也已标记,gcmarkBits对应的位为1

灰色和黑色的gcmarkBits都是1,如何区分二者呢?

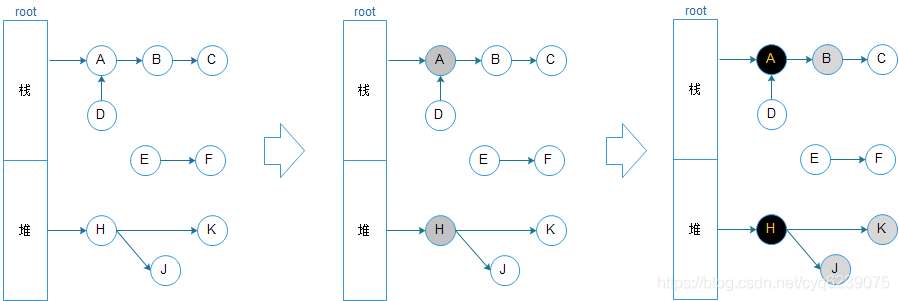

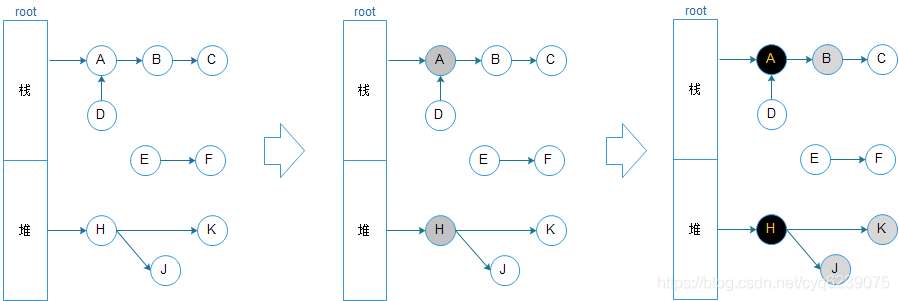

标记任务有标记队列,在标记队列中的是灰色,不在标记队里中的是黑色。标记过程见下图:

上图中根对象A是栈上分配的对象,H是堆中分配的全局变量,根对象A、H内部有分别引用了其他对象,而其他对象内部可能还引用其他对象,各个对象见的关系如上图所示。

- 初始状态下所有对象都是白色的。

- 接着开始扫描根对象,A、H是根对象所以被扫描到,A,H变为灰色对象。

- 接下来就开始扫描灰色对象,通过A到达B,B被标注灰色,A扫描结束后被标注黑色。同理J,K都被标注灰色,H被标注黑色。

- 继续扫描灰色对象,通过B到达C,C 被标注灰色,B被标注黑色,因为J,K没有引用对象,J,K标注黑色结束

- 最终,黑色的对象会被保留下来,白色对象D,E,F会被回收掉。

屏障技术

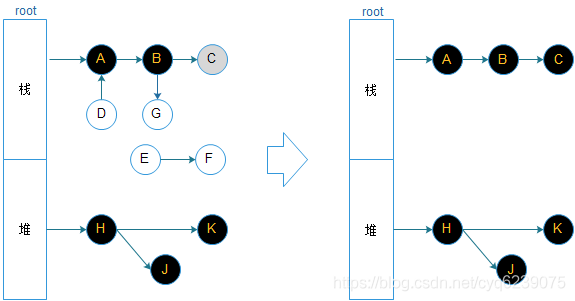

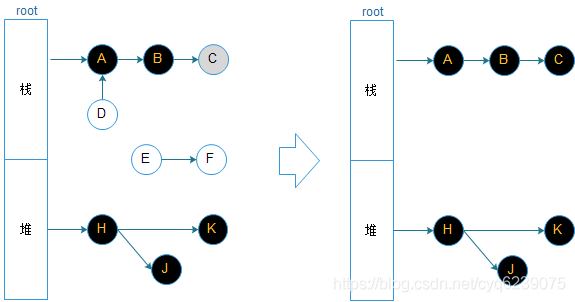

上图,假如B对象变黑后,又给B指向对象G,因为这个时候G对象已经扫描过了,所以G 对象还是白色,会被误回收。怎么解决这个问题呢?

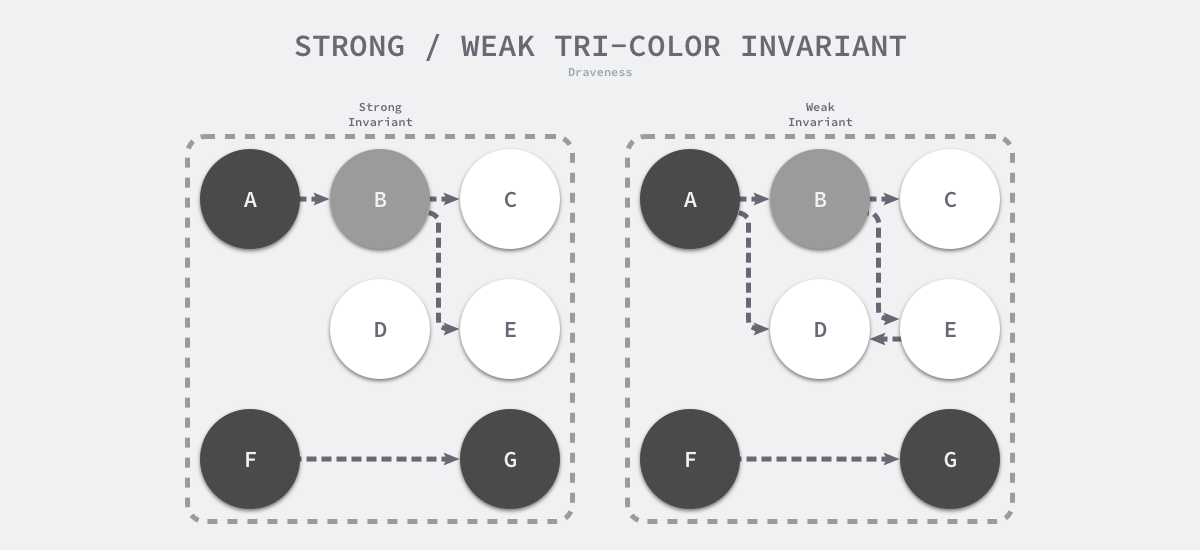

最简单的方法就是STW(stop the world)。也就是说,停止所有的协程。这个方法比较暴力会引起程序的卡顿,并不友好。让GC回收器,满足下面两种情况之一时,可保对象不丢失. 所以引出强-弱三色不变性:

内存屏障技术是一种屏障指令,它可以让 CPU 或者编译器在执行内存相关操作时遵循特定的约束,目前的多数的现代处理器都会乱序执行指令以最大化性能,但是该技术能够保证代码对内存操作的顺序性,在内存屏障前执行的操作一定会先于内存屏障后执行的操作。

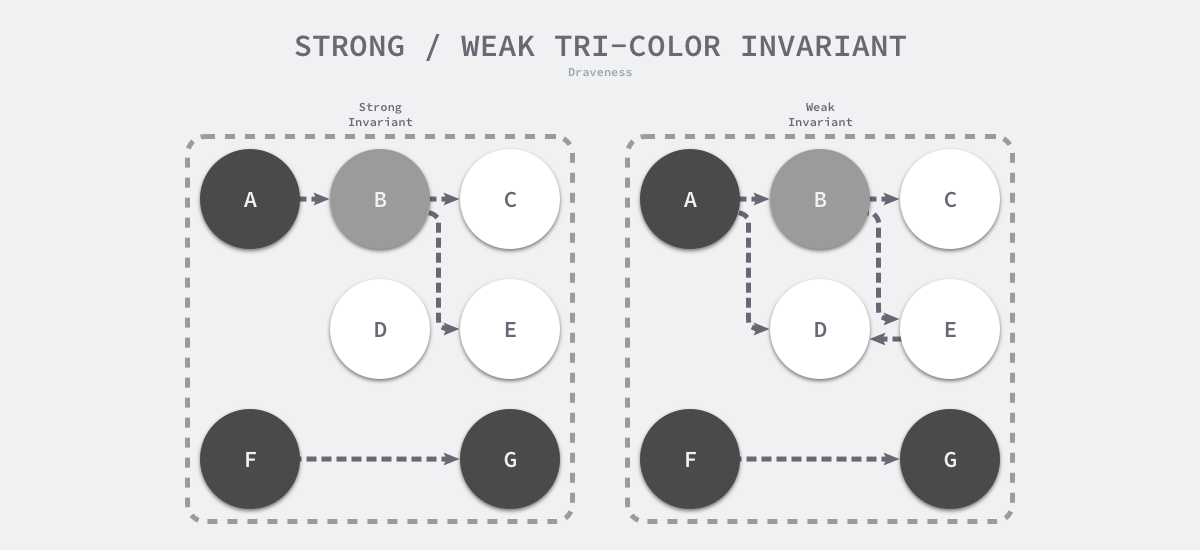

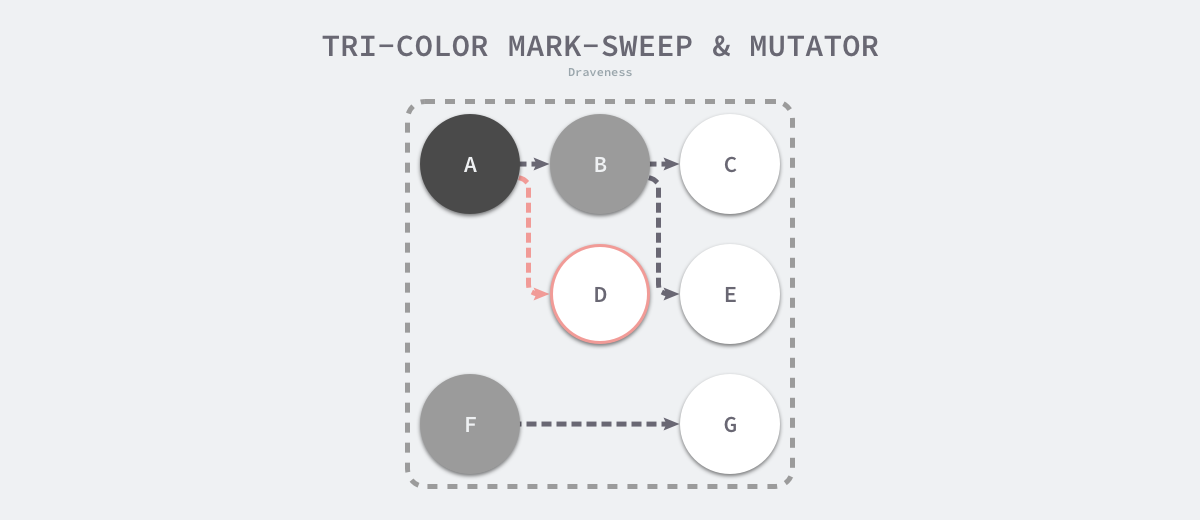

想要在并发或者增量的标记算法中保证正确性,我们需要达成以下两种三色不变性(Tri-color invariant)中的任意一种:

- 强三色不变性 — 黑色对象不会指向白色对象,只会指向灰色对象或者黑色对象;

- 弱三色不变性 — 黑色对象指向的白色对象必须包含一条从灰色对象经由多个白色对象的可达路径;

上图分别展示了遵循强三色不变性和弱三色不变性的堆内存,遵循上述两个不变性中的任意一个,我们都能保证垃圾收集算法的正确性,而屏障技术就是在并发或者增量标记过程中保证三色不变性的重要技术。

垃圾收集中的屏障技术更像是一个钩子方法,它是在用户程序读取对象、创建新对象以及更新对象指针时执行的一段代码,根据操作类型的不同,我们可以将它们分成读屏障(Read barrier)和写屏障(Write barrier)两种,因为读屏障需要在读操作中加入代码片段,对用户程序的性能影响很大,所以编程语言往往都会采用写屏障保证三色不变性。

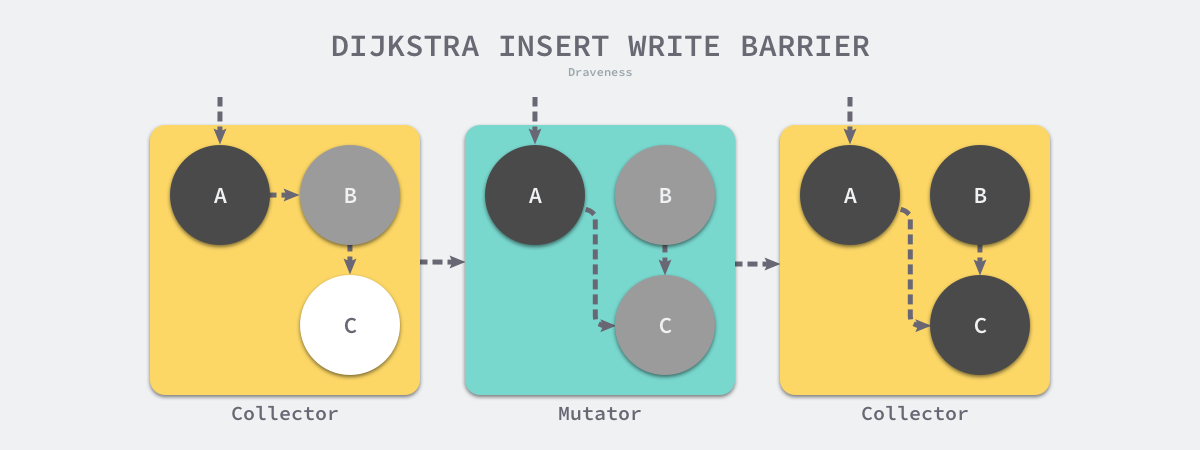

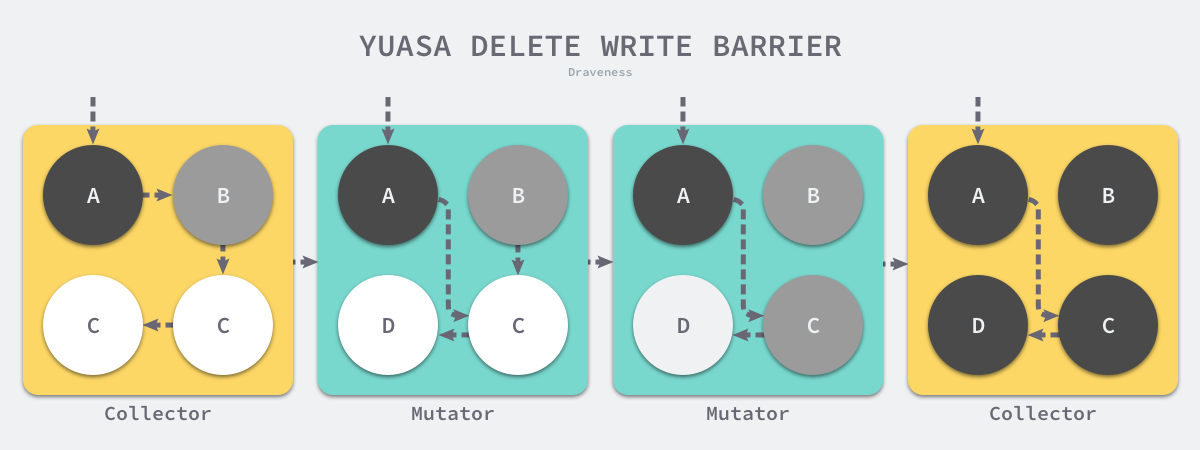

我们在这里想要介绍的是 Go 语言中使用的两种写屏障技术,分别是 Dijkstra 提出的插入写屏障和 Yuasa 提出的删除写屏障,这里会分析它们如何保证三色不变性和垃圾收集器的正确性。

插入写屏障

Dijkstra 在 1978 年提出了插入写屏障,通过如下所示的写屏障,用户程序和垃圾收集器可以在交替工作的情况下保证程序执行的正确性:

1

2

3

4

5

|

// 灰色赋值器 Dijkstra 插入屏障

func DijkstraWritePointer(slot *unsafe.Pointer, ptr unsafe.Pointer) {

shade(ptr)

*slot = ptr

}

|

- Slot 是 Go 代码里的被修改的指针对象

- Ptr 是 Slot 要修改成的值

上述插入写屏障的伪代码非常好理解,每当我们执行类似 *slot = ptr 的表达式时,我们会执行上述写屏障通过 shade 函数尝试改变指针的颜色。如果 ptr 指针是白色的,那么该函数会将该对象设置成灰色,其他情况则保持不变。

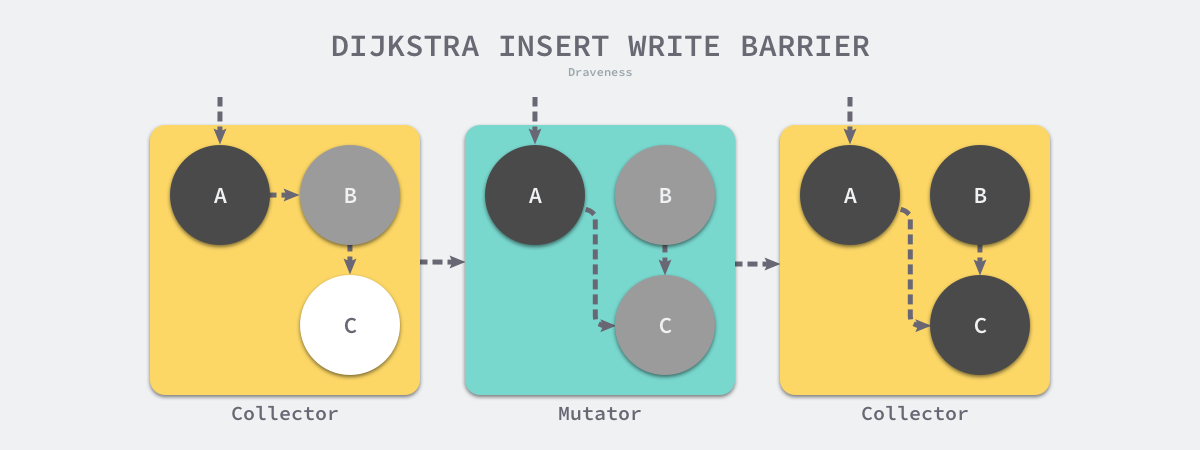

假设我们在应用程序中使用 Dijkstra 提出的插入写屏障,在一个垃圾收集器和用户程序交替运行的场景中会出现如上图所示的标记过程:

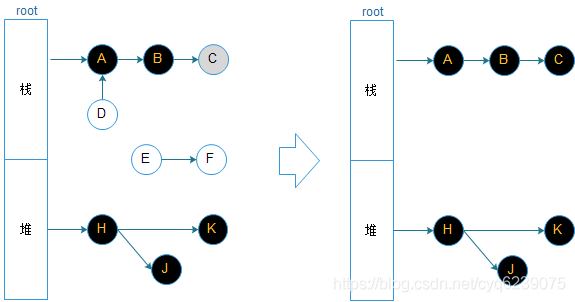

- 垃圾收集器将根对象指向 A 对象标记成黑色并将 A 对象指向的对象 B 标记成灰色;

- 用户程序修改 A 对象的指针,将原本指向 B 对象的指针指向 C 对象,这时触发写屏障将 C 对象标记成灰色;

- 垃圾收集器依次遍历程序中的其他灰色对象,将它们分别标记成黑色;

Dijkstra 的插入写屏障是一种相对保守的屏障技术,它会将有存活可能的对象都标记成灰色以满足强三色不变性。在如上所示的垃圾收集过程中,实际上不再存活的 B 对象最后没有被回收;而如果我们在第二和第三步之间将指向 C 对象的指针改回指向 B,垃圾收集器仍然认为 C 对象是存活的,这些被错误标记的垃圾对象只有在下一个循环才会被回收。

在 Go 语言 v1.7 版本之前,运行时会使用 Dijkstra 插入写屏障保证强三色不变性,但是运行时并没有在所有的垃圾收集根对象上开启插入写屏障。因为 Go 语言的应用程序可能包含成百上千的 Goroutine,而垃圾收集的根对象一般包括全局变量和栈对象,如果运行时需要在几百个 Goroutine 的栈上都开启写屏障,会带来巨大的额外开销,所以 Go 团队在实现上选择了在标记阶段完成时暂停程序、将所有栈对象标记为灰色并重新扫描,在活跃 Goroutine 非常多的程序中,重新扫描的过程需要占用 10 ~ 100ms 的时间。

插入式的 Dijkstra 写屏障虽然实现非常简单并且也能保证强三色不变性,但是它也有很明显的缺点。因为栈上的对象在垃圾收集中也会被认为是根对象,所以为了保证内存的安全,Dijkstra 在标记阶段完成重新对栈上的对象进行扫描,重新扫描栈对象时需要暂停程序,这个自然就会导致整个进程的赋值器卡顿,

删除写屏障

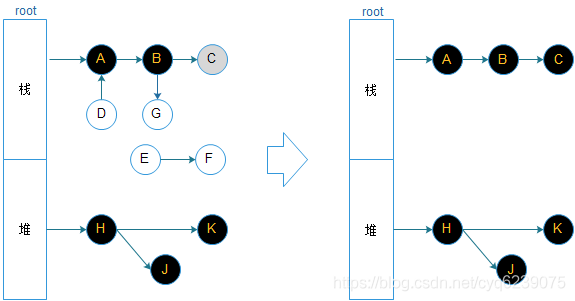

删除写屏障也叫基于快照的写屏障方案,必须在起始时,STW 扫描整个栈(注意了,是所有的 goroutine 栈),保证所有堆上在用的对象都处于灰色保护下,保证的是弱三色不变式;

该算法会使用如下所示的写屏障保证增量或者并发执行垃圾收集时程序的正确性:

1

2

3

4

5

|

// 黑色赋值器 Yuasa 屏障

func YuasaWritePointer(slot *unsafe.Pointer, ptr unsafe.Pointer) {

shade(*slot)

*slot = ptr

}

|

上述代码会在老对象的引用被删除时,将白色的老对象涂成灰色,这样删除写屏障就可以保证弱三色不变性,老对象引用的下游对象一定可以被灰色对象引用。

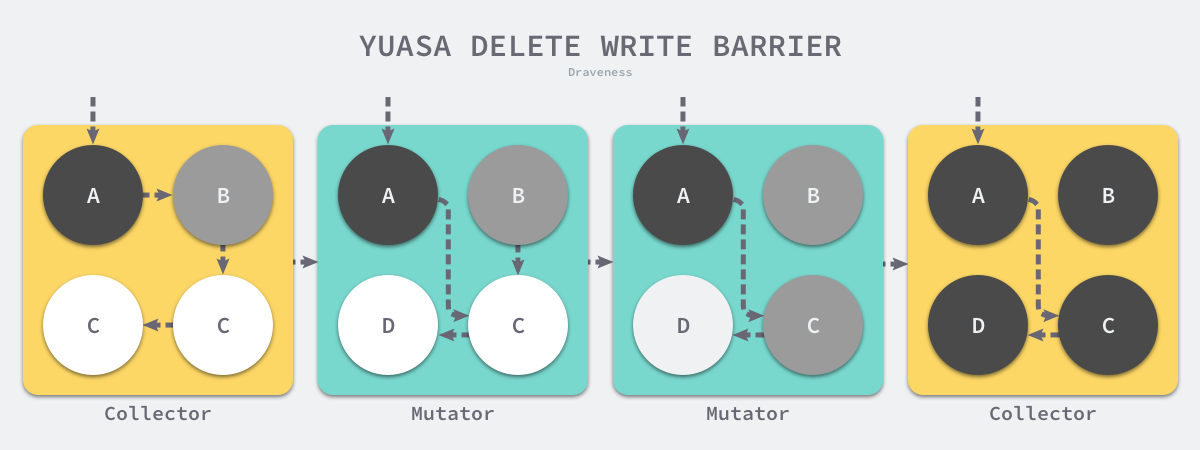

假设我们在应用程序中使用 Yuasa 提出的删除写屏障,在一个垃圾收集器和用户程序交替运行的场景中会出现如上图所示的标记过程:

- 垃圾收集器将根对象指向 A 对象标记成黑色并将 A 对象指向的对象 B 标记成灰色;

- 用户程序将 A 对象原本指向 B 的指针指向 C,触发删除写屏障,但是因为 B 对象已经是灰色的,所以不做改变;

- 用户程序将 B 对象原本指向 C 的指针删除,触发删除写屏障,白色的 C 对象被涂成灰色;

- 垃圾收集器依次遍历程序中的其他灰色对象,将它们分别标记成黑色;

上述过程中的第三步触发了 Yuasa 删除写屏障的着色,因为用户程序删除了 B 指向 C 对象的指针,所以 C 和 D 两个对象会分别违反强三色不变性和弱三色不变性:

- 强三色不变性 — 黑色的 A 对象直接指向白色的 C 对象;

- 弱三色不变性 — 垃圾收集器无法从某个灰色对象出发,经过几个连续的白色对象访问白色的 C 和 D 两个对象;

Yuasa 删除写屏障通过对 C 对象的着色,保证了 C 对象和下游的 D 对象能够在这一次垃圾收集的循环中存活,避免发生悬挂指针以保证用户程序的正确性。

由于起始快照的原因,起始也是执行 STW,删除写屏障不适用于栈特别大的场景,栈越大,STW 扫描时间越长,对于现代服务器上的程序来说,栈地址空间都很大,所以删除写屏障都不适用.

删除写屏障会导致扫描进度(波面)的后退,所以扫描精度不如插入写屏障.

写屏障对比

Dijkstra insertion barrier

优点:

- 能够保证堆上对象的强三色不变性(无栈对象参与时)

- 能防止指针从栈被隐藏进堆(因为堆上新建的连接都会被着色)

缺点:

- 不能防止栈上的黑色对象指向堆上的白色对象(这个白色对象之前是被堆上的黑/灰指着的),所以在mark结束后需要stw重新扫描所有 goroutine 栈

Yuasa deletion barrier

优点:

- 能够保证堆上的弱三色不变性(无栈对象参与时)

- 能防止指针从堆被隐藏进栈(因为堆上断开的连接都会被着色)

缺点:

- 不能防止堆上的黑色对象指向堆上的白色对象(这个白色对象之前是由栈的黑/灰色对象指着的),所以需要 GC 开始时 STW 对栈做快照

混合写屏障

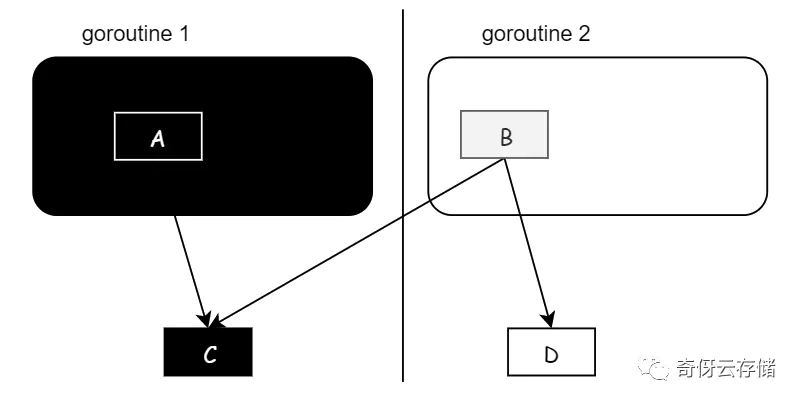

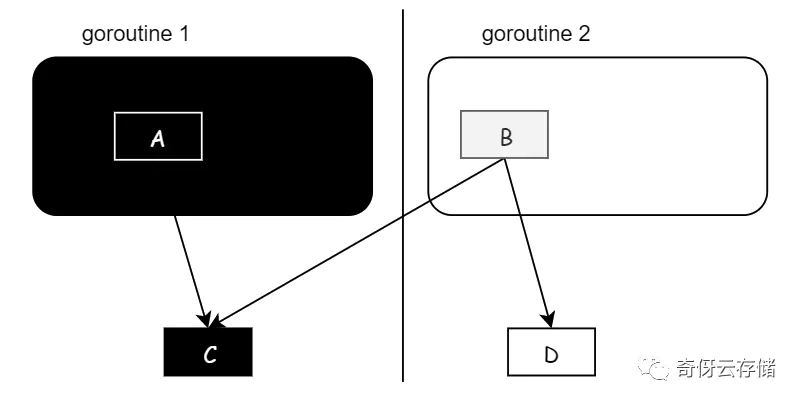

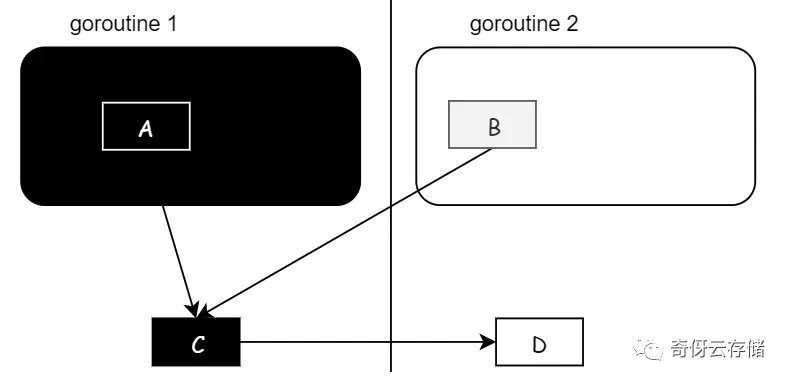

在删除写屏障下,如果我不 STW 所有的栈,而是一个栈一个栈的快照,有什么问题?举例:如果没有把栈完全扫黑,那么可能出现丢数据,如下:

初始状态:

- A 是 g1 栈的一个对象,g1栈已经扫描完了,并且 C 也是扫黑了的对象;

- B 是 g2 栈的对象,指向了 C 和 D,g2 完全还没扫描,B 是一个灰色对象,D 是白色对象;

步骤一:g2 进行赋值变更,把 C 指向 D 对象,这个时候黑色的 C 就指向了白色的 D(由于是删除屏障,这里是不会触发hook的)

步骤二:把 B 指向 C 的引用删除,由于是栈对象操作,不会触发删除写屏障;

步骤三:清理,因为 C 已经是黑色对象了,所以不会再扫描,所以 D 就会被错误的清理掉。

解决办法:加入插入写屏障的逻辑,C 指向 D 的时候,把 D 置灰,这样扫描也没问题。这样就能去掉起始 STW 扫描,从而可以并发,一个一个栈扫描。这就成了当前在用的混合写屏障了

Go 语言在 v1.8 组合 Dijkstra 插入写屏障和 Yuasa 删除写屏障构成了如下所示的混合写屏障,该写屏障会将被覆盖的对象标记成灰色并在当前栈没有扫描时将新对象也标记成灰色:

1

2

3

4

5

|

writePointer(slot, ptr):

shade(*slot)

if current stack is grey:

shade(ptr)

*slot = ptr

|

后来Go语言团队认为 stack check 成本太高,至少要进行一次atomic操作,实际上在Go语言是如此实现的:

1

2

3

4

|

writePointer(slot, ptr):

shade(*slot)

shade(ptr)

*slot = ptr

|

混合写屏障具体操作:

-

GC开始后逐一将栈上的对象全部扫描并标记为黑色(无需STW),

-

GC期间,任何在栈上创建的新对象,均为黑色。防止新分配的栈内存和堆内存中的对象被错误地回收,因为栈内存在标记阶段最终都会变为黑色,所以不再需要重新扫描栈空间。

-

被删除的对象标记为灰色。

-

被添加的对象标记为灰色。

满足: 变形的弱三色不变式.

采用混合屏障后可以大幅压缩1.7版本插入写屏障带来的的第二次STW的时间。

总结:

- 混合写屏障继承了插入写屏障的优点,起始无需 STW 打快照,直接并发扫描垃圾即可;

- 混合写屏障继承了删除写屏障的优点,消除了插入写屏障时期最后 STW 的重新扫描栈;

- 混合写屏障扫描栈虽然没有 STW,但是扫描某一个具体的栈的时候,还是要停止这个 goroutine 赋值器的工作.

增量和并发

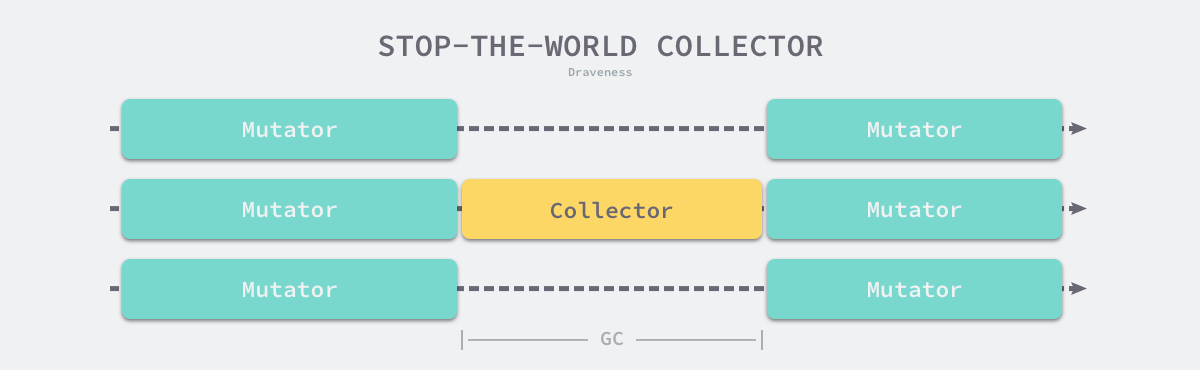

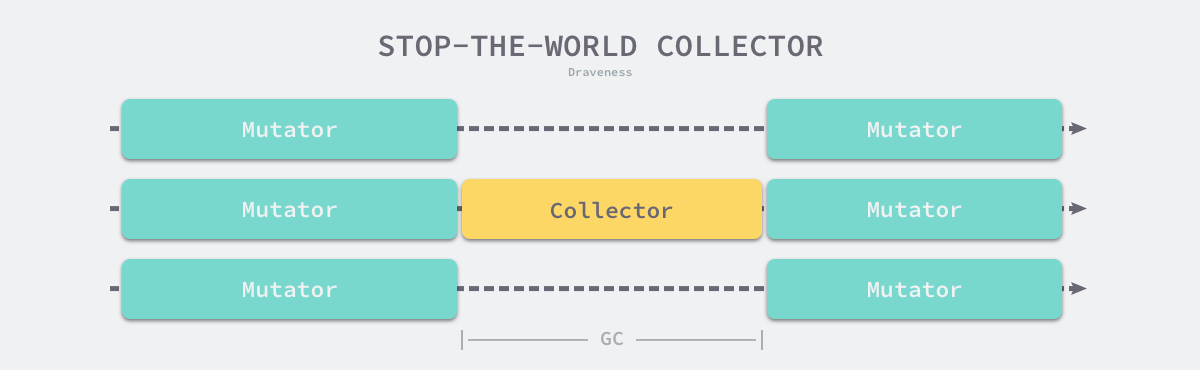

传统的垃圾收集算法会在垃圾收集的执行期间暂停应用程序,一旦触发垃圾收集,垃圾收集器就会抢占 CPU 的使用权占据大量的计算资源以完成标记和清除工作,然而很多追求实时的应用程序无法接受长时间的 STW。

远古时代的计算资源还没有今天这么丰富,今天的计算机往往都是多核的处理器,垃圾收集器一旦开始执行就会浪费大量的计算资源,为了减少应用程序暂停的最长时间和垃圾收集的总暂停时间,我们会使用下面的策略优化现代的垃圾收集器:

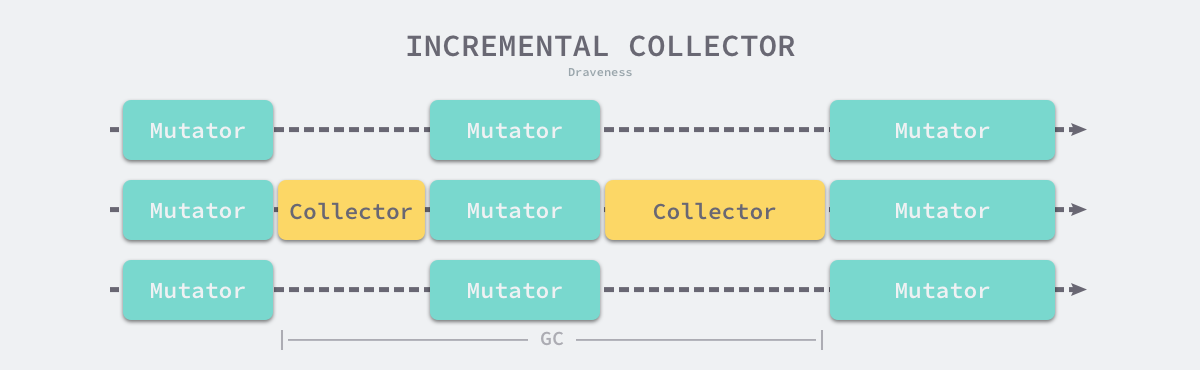

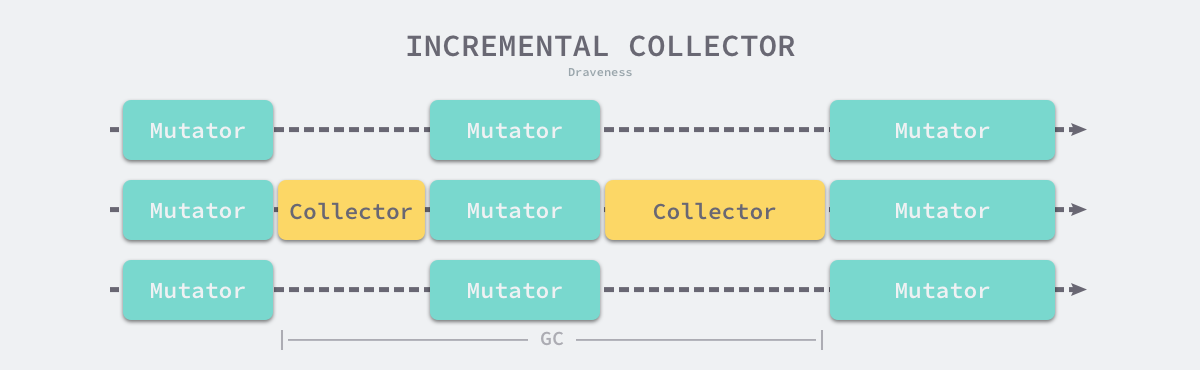

- 增量垃圾收集 — 增量地标记和清除垃圾,降低应用程序暂停的最长时间;

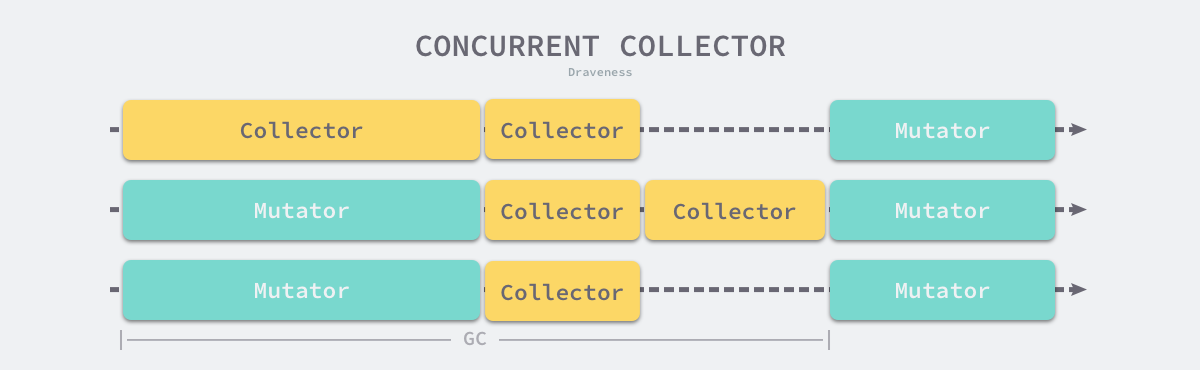

- 并发垃圾收集 — 利用多核的计算资源,在用户程序执行时并发标记和清除垃圾;

因为增量和并发两种方式都可以与用户程序交替运行,所以我们需要使用屏障技术保证垃圾收集的正确性;与此同时,应用程序也不能等到内存溢出时触发垃圾收集,因为当内存不足时,应用程序已经无法分配内存,这与直接暂停程序没有什么区别,增量和并发的垃圾收集需要提前触发并在内存不足前完成整个循环,避免程序的长时间暂停。

增量收集器

增量式(Incremental)的垃圾收集是减少程序最长暂停时间的一种方案,它可以将原本时间较长的暂停时间切分成多个更小的 GC 时间片,虽然从垃圾收集开始到结束的时间更长了,但是这也减少了应用程序暂停的最大时间:

需要注意的是,增量式的垃圾收集需要与三色标记法一起使用,为了保证垃圾收集的正确性,我们需要在垃圾收集开始前打开写屏障,这样用户程序对内存的修改都会先经过写屏障的处理,保证了堆内存中对象关系的强三色不变性或者弱三色不变性。虽然增量式的垃圾收集能够减少最大的程序暂停时间,但是增量式收集也会增加一次 GC 循环的总时间,在垃圾收集期间,因为写屏障的影响用户程序也需要承担额外的计算开销,所以增量式的垃圾收集也不是只有优点的。

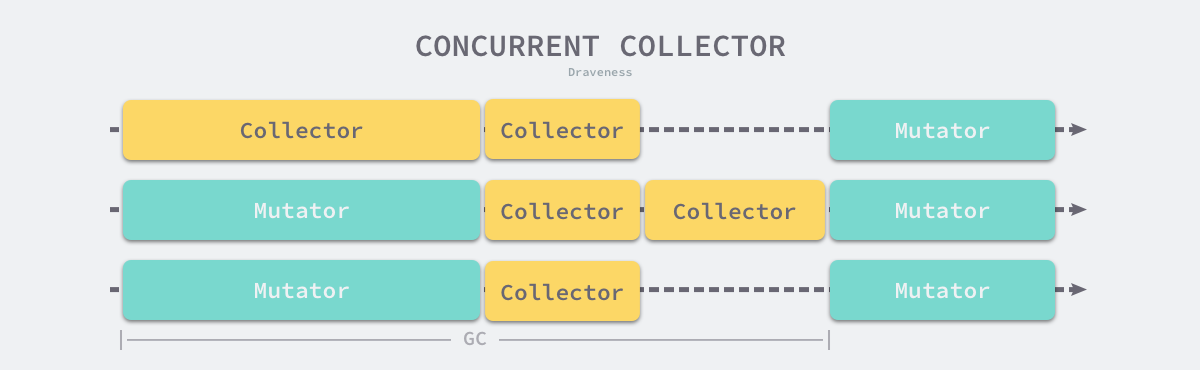

并发收集器

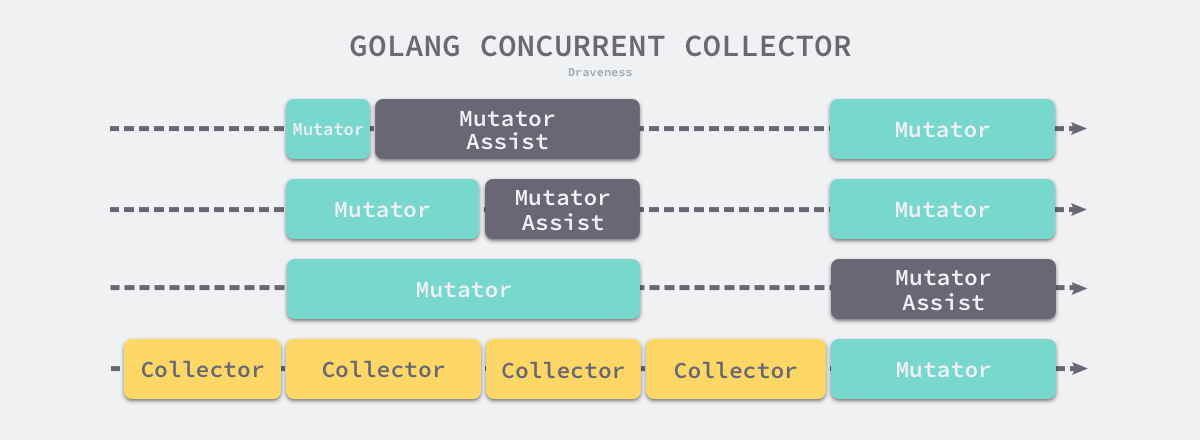

并发(Concurrent)的垃圾收集不仅能够减少程序的最长暂停时间,还能减少整个垃圾收集阶段的时间,通过开启读写屏障、利用多核优势与用户程序并行执行,并发垃圾收集器确实能够减少垃圾收集对应用程序的影响:

虽然并发收集器能够与用户程序一起运行,但是并不是所有阶段都可以与用户程序一起运行,部分阶段还是需要暂停用户程序的,不过与传统的算法相比,并发的垃圾收集可以将能够并发执行的工作尽量并发执行;当然,因为读写屏障的引入,并发的垃圾收集器也一定会带来额外开销,不仅会增加垃圾收集的总时间,还会影响用户程序,这是我们在设计垃圾收集策略时必须要注意的。

Go 语言在 v1.5 中引入了并发的垃圾收集器,该垃圾收集器使用了我们上面提到的三色抽象和写屏障技术保证垃圾收集器执行的正确性,如何实现并发的垃圾收集器在这里就不展开介绍了,我们来了解一些并发垃圾收集器的工作流程。

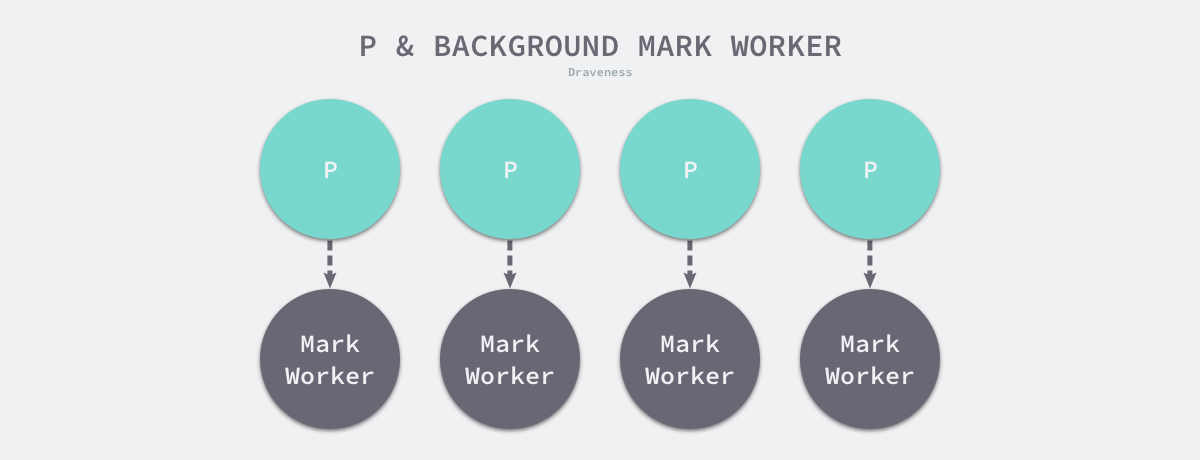

首先,并发垃圾收集器必须在合适的时间点触发垃圾收集循环,假设我们的 Go 语言程序运行在一台 4 核的物理机上,那么在垃圾收集开始后,收集器会占用 25% 计算资源在后台来扫描并标记内存中的对象:

Go 语言的并发垃圾收集器会在扫描对象之前暂停程序做一些标记对象的准备工作,其中包括启动后台标记的垃圾收集器以及开启写屏障,如果在后台执行的垃圾收集器不够快,应用程序申请内存的速度超过预期,运行时就会让申请内存的应用程序辅助完成垃圾收集的扫描阶段,在标记和标记终止阶段结束之后就会进入异步的清理阶段,将不用的内存增量回收。

v1.5 版本实现的并发垃圾收集策略由专门的 Goroutine 负责在处理器之间同步和协调垃圾收集的状态。当其他的 Goroutine 发现需要触发垃圾收集时,它们需要将该信息通知给负责修改状态的主 Goroutine,然而这个通知的过程会带来一定的延迟,这个延迟的时间窗口很可能是不可控的,用户程序会在这段时间分配界面很多内存空间。

v1.6 引入了去中心化的垃圾收集协调机制,将垃圾收集器变成一个显式的状态机,任意的 Goroutine 都可以调用方法触发状态的迁移,常见的状态迁移方法包括以下几个

- runtime.gcStart — 从

_GCoff 转换至_GCmark 阶段,进入并发标记阶段并打开写屏障;

- runtime.gcMarkDone — 如果所有可达对象都已经完成扫描,调用

runtime.gcMarkTermination;

- runtime.gcMarkTermination — 从

_GCmark 转换_GCmarktermination 阶段,进入标记终止阶段并在完成后进入 _GCoff;

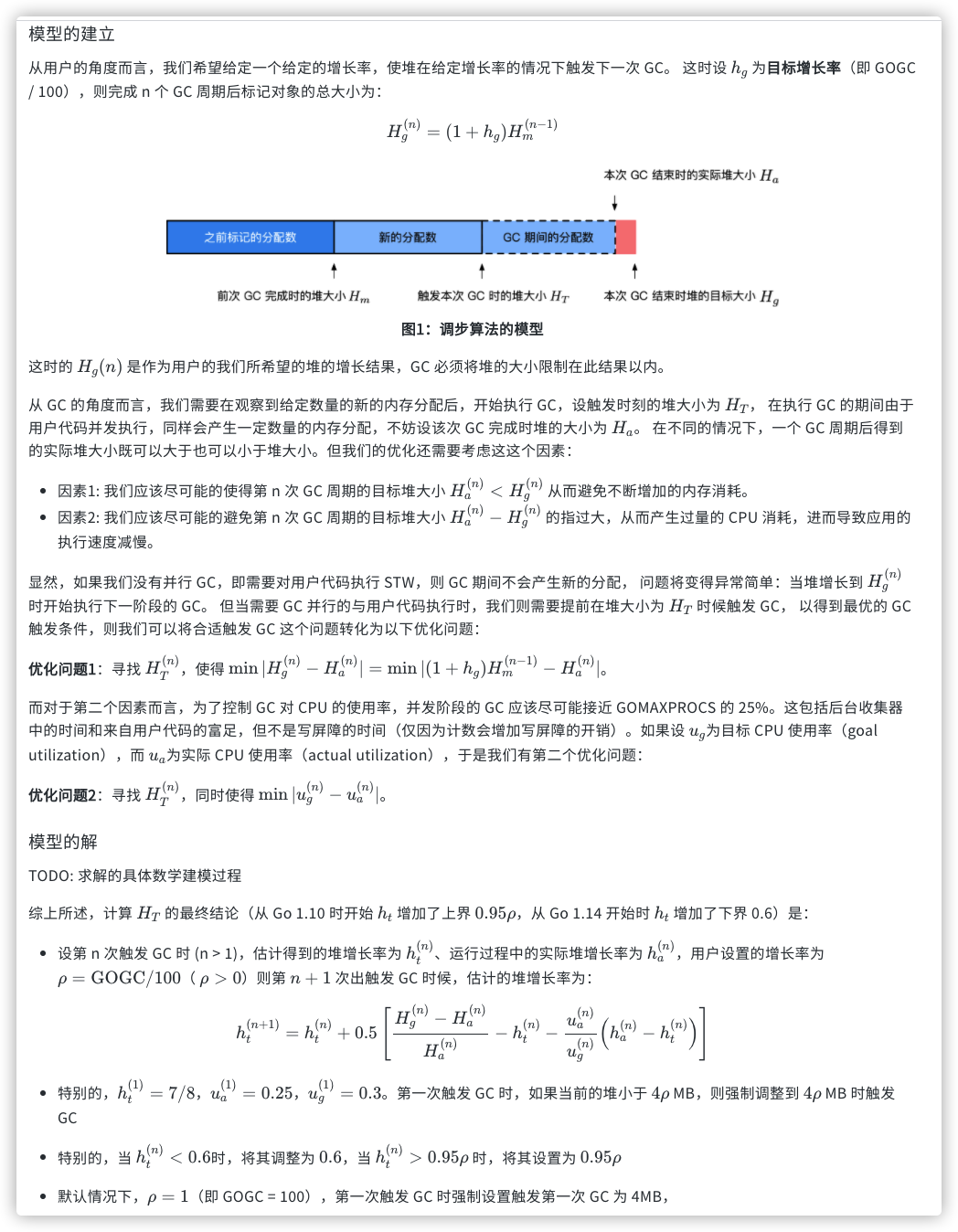

调步算法

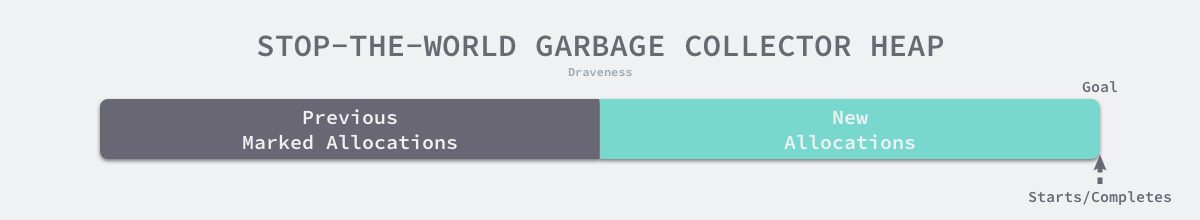

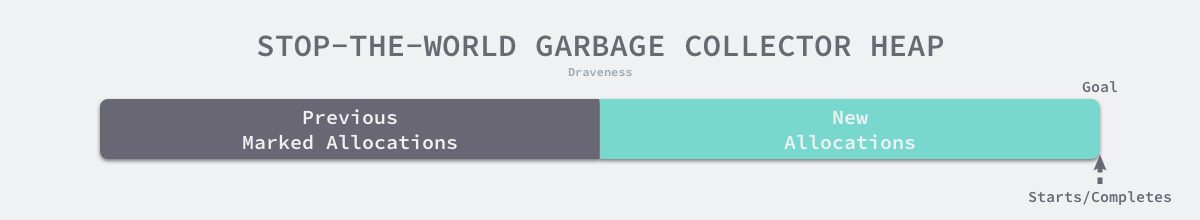

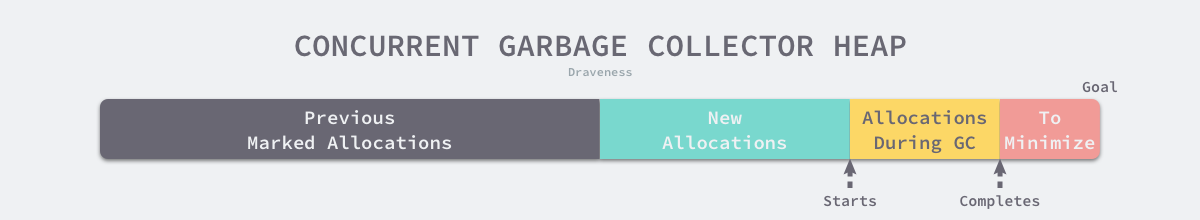

STW 的垃圾收集器虽然需要暂停程序,但是它能够有效地控制堆内存的大小,Go 语言运行时的默认配置会在堆内存达到上一次垃圾收集的 2 倍时,触发新一轮的垃圾收集,这个行为可以通过环境变量 GOGC 调整,在默认情况下它的值为 100,即增长 100% 的堆内存才会触发 GC。

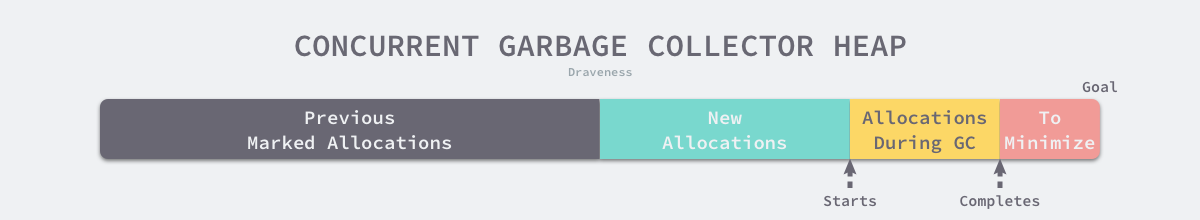

因为并发垃圾收集器会与程序一起运行,所以它无法准确的控制堆内存的大小,并发收集器需要在达到目标前触发垃圾收集,这样才能够保证内存大小的可控,并发收集器需要尽可能保证垃圾收集结束时的堆内存与用户配置的 GOGC 一致。

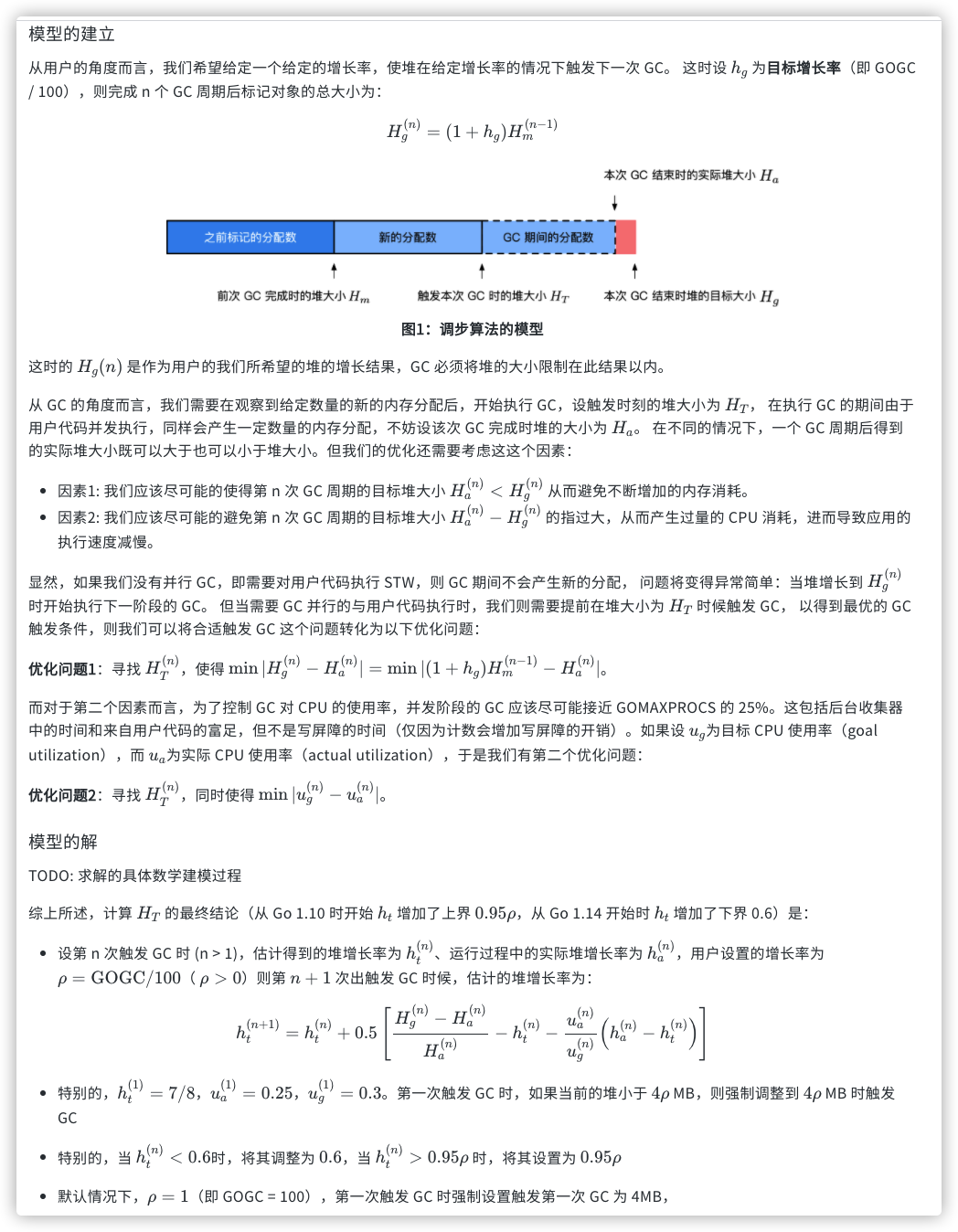

Go 语言 v1.5 引入并发垃圾收集器的同时使用垃圾收集调步(Pacing)算法计算触发的垃圾收集的最佳时间,确保触发的时间既不会浪费计算资源,也不会超出预期的堆大小。如上图所示,其中黑色的部分是上一次垃圾收集后标记的堆大小,绿色部分是上次垃圾收集结束后新分配的内存,因为我们使用并发垃圾收集,所以黄色的部分就是在垃圾收集期间分配的内存,最后的红色部分是垃圾收集结束时与目标的差值,我们希望尽可能减少红色部分内存,降低垃圾收集带来的额外开销以及程序的暂停时间。

垃圾收集调步算法是跟随 v1.5 一同引入的,该算法的目标是优化堆的增长速度和垃圾收集器的 CPU 利用率,而在 v1.10 版本中又对该算法进行了优化,将原有的目的堆大小拆分成了软硬两个目标.

调步算法包含四个部分:

- GC 周期所需的扫描估计器

- 为用户代码根据堆分配到目标堆大小的时间估计扫描工作量的机制

- 用户代码为未充分利用 CPU 预算时进行后台扫描的调度程序

- GC 触发比率的控制器

现在我们从两个不同的视角来对这个问题进行建模。

Ht的时候开始GC,Ha的时候结束GC,Ha非常接近Hg。

如何保证在Ht开始gc时所有的span都被清扫完?

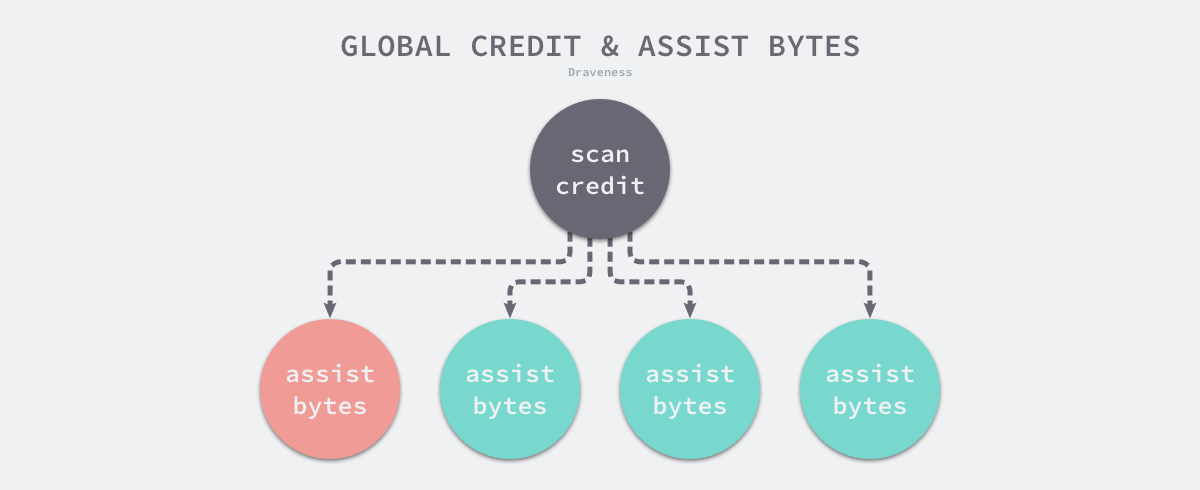

除了有一个后台清扫协程外,用户在分配内存时也需要辅助清扫来保证在开启下一轮的gc时span都被清扫完毕。假设有k page的span需要sweep,那么距离下一次gc还有Ht-Hm(n-1)的内存可供分配,那么平均每申请1byte内存需要清扫k/ Ht-Hm(n-1) page 的span。(k值会根据sweep进度更改)

辅助清扫申请新span时才会检查,辅助清扫的触发可以看cacheSpan函数, 触发时G会帮助回收"工作量"页的对象.

1

|

spanBytes * sweepPagesPerByte

|

意思是分配的大小乘以系数sweepPagesPerByte, sweepPagesPerByte的计算在函数gcSetTriggerRatio中.

如何保证在Ha时gc都被mark完?

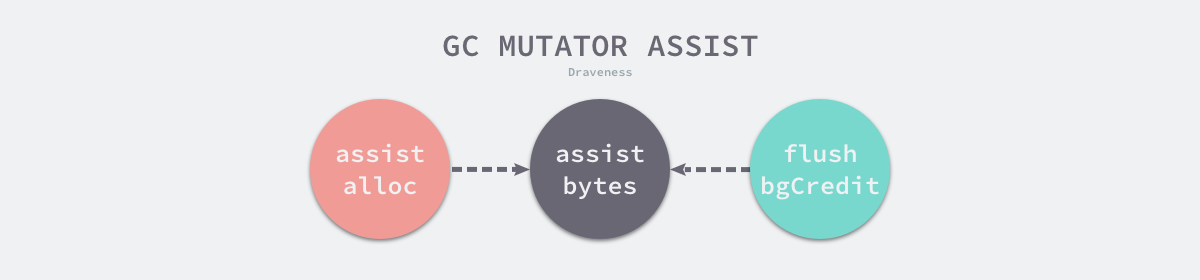

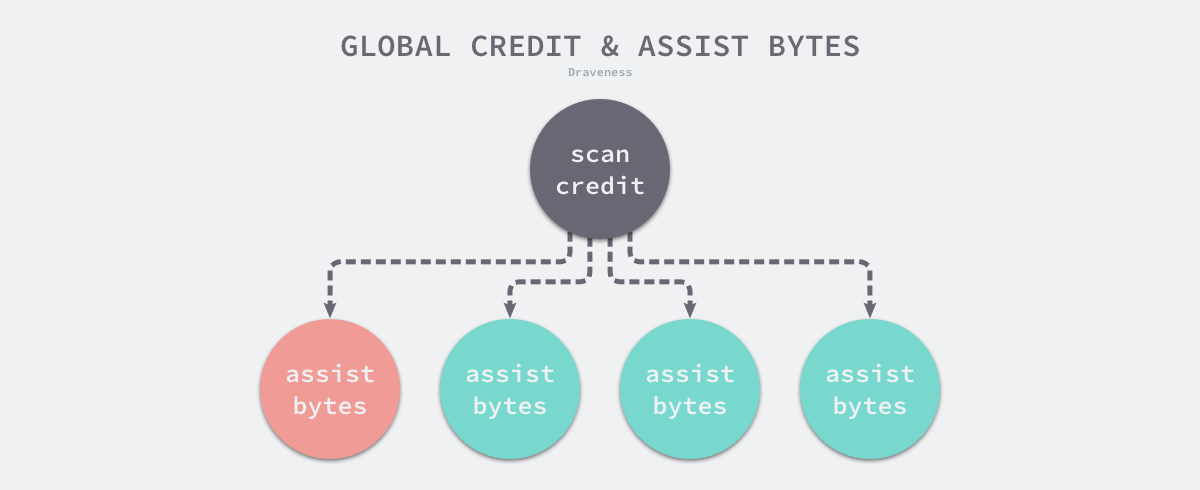

Gc在Ht开始,在到达Hg时尽量标记完所有的对象,除了后台的标记协程外还需要在分配内存是进行辅助mark。从Ht到Hg的内存可以分配,这个时候还有scanWorkExpected的对象需要scan,那么平均分配1byte内存需要辅助mark量:scanWorkExpected/(Hg-Ht) 个对象,scanWorkExpected会根据mark进度更改。

辅助标记的触发可以查看上面的mallocgc函数, 触发时G会帮助扫描"工作量"个对象, 工作量的计算公式是:

1

|

debtBytes * assistWorkPerByte

|

意思是分配的大小乘以系数assistWorkPerByte, assistWorkPerByte的计算在函数revise中.

流程图

垃圾回收代码流程

- gcStart -> gcBgMarkWorker && gcRootPrepare,这时 gcBgMarkWorker 在休眠中

- schedule -> findRunnableGCWorker 唤醒适宜数量的 gcBgMarkWorker

- gcBgMarkWorker -> gcDrain -> scanobject -> greyobject(set mark bit and put to gcw)

- 在 gcBgMarkWorker 中调用 gcMarkDone 排空各种 wbBuf 后,使用分布式 termination 检查算法,进入 gcMarkTermination -> gcSweep 唤醒后台沉睡的 sweepg 和 scvg -> sweep -> wake bgsweep && bgscavenge

全局变量

在垃圾收集中有一些比较重要的全局变量,在分析其过程之前,我们会先逐一介绍这些重要的变量,这些变量在垃圾收集的各个阶段中会反复出现,所以理解他们的功能是非常重要的,我们先介绍一些比较简单的变量:

- runtime.gcphase 是垃圾收集器当前处于的阶段,可能处于 _GCoff、_GCmark 和 _GCmarktermination,Goroutine 在读取或者修改该阶段时需要保证原子性;

- runtime.gcBlackenEnabled 是一个布尔值,当垃圾收集处于标记阶段时,该变量会被置为 1,在这里辅助垃圾收集的用户程序和后台标记的任务可以将对象涂黑;

- runtime.gcController 实现了垃圾收集的调步算法,它能够决定触发并行垃圾收集的时间和待处理的工作;

- runtime.gcpercent 是触发垃圾收集的内存增长百分比,默认情况下为 100,即堆内存相比上次垃圾收集增长 100% 时应该触发 GC,并行的垃圾收集器会在到达该目标前完成垃圾收集;

- runtime.writeBarrier 是一个包含写屏障状态的结构体,其中的 enabled 字段表示写屏障的开启与关闭;

- runtime.worldsema 是全局的信号量,获取该信号量的线程有权利暂停当前应用程序;

除了上述全局的变量之外,我们在这里还需要简单了解一下 runtime.work 变量:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

|

var work struct {

full lfstack // lock-free list of full blocks workbuf

empty lfstack // lock-free list of empty blocks workbuf

pad0 cpu.CacheLinePad // prevents false-sharing between full/empty and nproc/nwait

wbufSpans struct {

lock mutex

// free is a list of spans dedicated to workbufs, but

// that don't currently contain any workbufs.

free mSpanList

// busy is a list of all spans containing workbufs on

// one of the workbuf lists.

busy mSpanList

}

// Restore 64-bit alignment on 32-bit.

_ uint32

// bytesMarked is the number of bytes marked this cycle. This

// includes bytes blackened in scanned objects, noscan objects

// that go straight to black, and permagrey objects scanned by

// markroot during the concurrent scan phase. This is updated

// atomically during the cycle. Updates may be batched

// arbitrarily, since the value is only read at the end of the

// cycle.

//

// Because of benign races during marking, this number may not

// be the exact number of marked bytes, but it should be very

// close.

//

// Put this field here because it needs 64-bit atomic access

// (and thus 8-byte alignment even on 32-bit architectures).

bytesMarked uint64

markrootNext uint32 // next markroot job

markrootJobs uint32 // number of markroot jobs

nproc uint32

tstart int64

nwait uint32

ndone uint32

// Number of roots of various root types. Set by gcMarkRootPrepare.

nFlushCacheRoots int

nDataRoots, nBSSRoots, nSpanRoots, nStackRoots int

// Each type of GC state transition is protected by a lock.

// Since multiple threads can simultaneously detect the state

// transition condition, any thread that detects a transition

// condition must acquire the appropriate transition lock,

// re-check the transition condition and return if it no

// longer holds or perform the transition if it does.

// Likewise, any transition must invalidate the transition

// condition before releasing the lock. This ensures that each

// transition is performed by exactly one thread and threads

// that need the transition to happen block until it has

// happened.

//

// startSema protects the transition from "off" to mark or

// mark termination.

startSema uint32

// markDoneSema protects transitions from mark to mark termination.

markDoneSema uint32

bgMarkReady note // signal background mark worker has started

bgMarkDone uint32 // cas to 1 when at a background mark completion point

// Background mark completion signaling

// mode is the concurrency mode of the current GC cycle.

mode gcMode

// userForced indicates the current GC cycle was forced by an

// explicit user call.

userForced bool

// totaltime is the CPU nanoseconds spent in GC since the

// program started if debug.gctrace > 0.

totaltime int64

// initialHeapLive is the value of memstats.heap_live at the

// beginning of this GC cycle.

initialHeapLive uint64

// assistQueue is a queue of assists that are blocked because

// there was neither enough credit to steal or enough work to

// do.

assistQueue struct {

lock mutex

q gQueue

}

// sweepWaiters is a list of blocked goroutines to wake when

// we transition from mark termination to sweep.

sweepWaiters struct {

lock mutex

list gList

}

// cycles is the number of completed GC cycles, where a GC

// cycle is sweep termination, mark, mark termination, and

// sweep. This differs from memstats.numgc, which is

// incremented at mark termination.

cycles uint32

// Timing/utilization stats for this cycle.

stwprocs, maxprocs int32

tSweepTerm, tMark, tMarkTerm, tEnd int64 // nanotime() of phase start

pauseNS int64 // total STW time this cycle

pauseStart int64 // nanotime() of last STW

// debug.gctrace heap sizes for this cycle.

heap0, heap1, heap2, heapGoal uint64

}

|

该结构体中包含大量垃圾收集的相关字段,例如:表示完成的垃圾收集循环的次数、当前循环时间和 CPU 的利用率、垃圾收集的模式等等,我们会在后面的小节中见到该结构体中的更多的字段。

根对象

在GC的标记阶段首先需要标记的就是"根对象", 从根对象开始可到达的所有对象都会被认为是存活的.

根对象包含了全局变量, 各个G的栈上的变量等, GC会先扫描根对象然后再扫描根对象可到达的所有对象.

- Fixed Roots: 特殊的扫描工作 :

- fixedRootFinalizers: 扫描析构器队列

- fixedRootFreeGStacks: 释放已中止的G的栈

- Flush Cache Roots: 释放mcache中的所有span, 要求STW

- Data Roots: 扫描可读写的全局变量

- BSS Roots: 扫描只读的全局变量

- Span Roots: 扫描各个span中特殊对象(析构器列表)

- Stack Roots: 扫描各个G的栈

标记阶段(Mark)会做其中的"Fixed Roots", “Data Roots”, “BSS Roots”, “Span Roots”, “Stack Roots”.

完成标记阶段(Mark Termination)会做其中的"Fixed Roots", “Flush Cache Roots”.

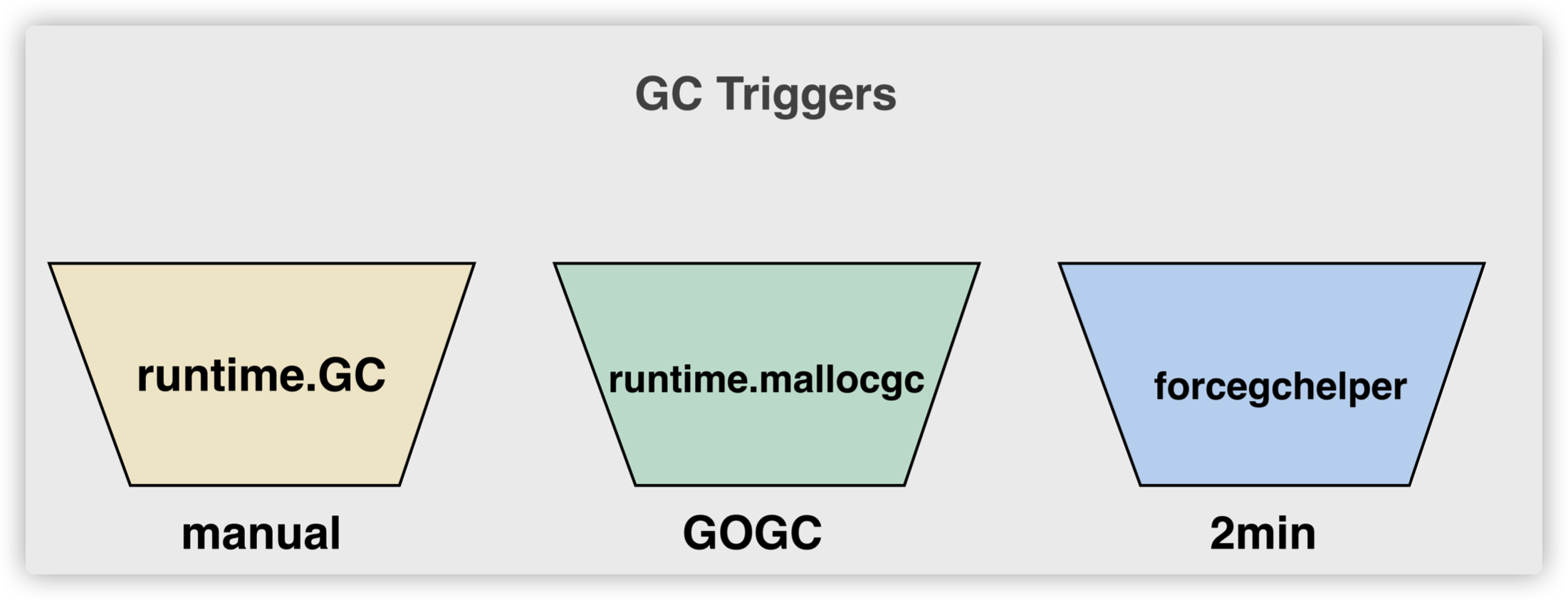

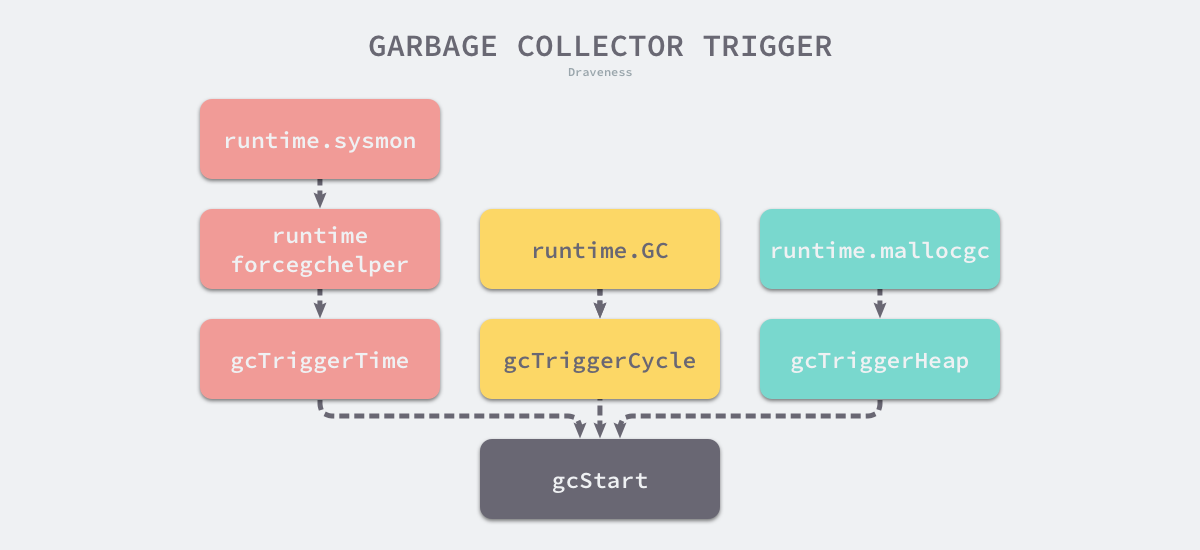

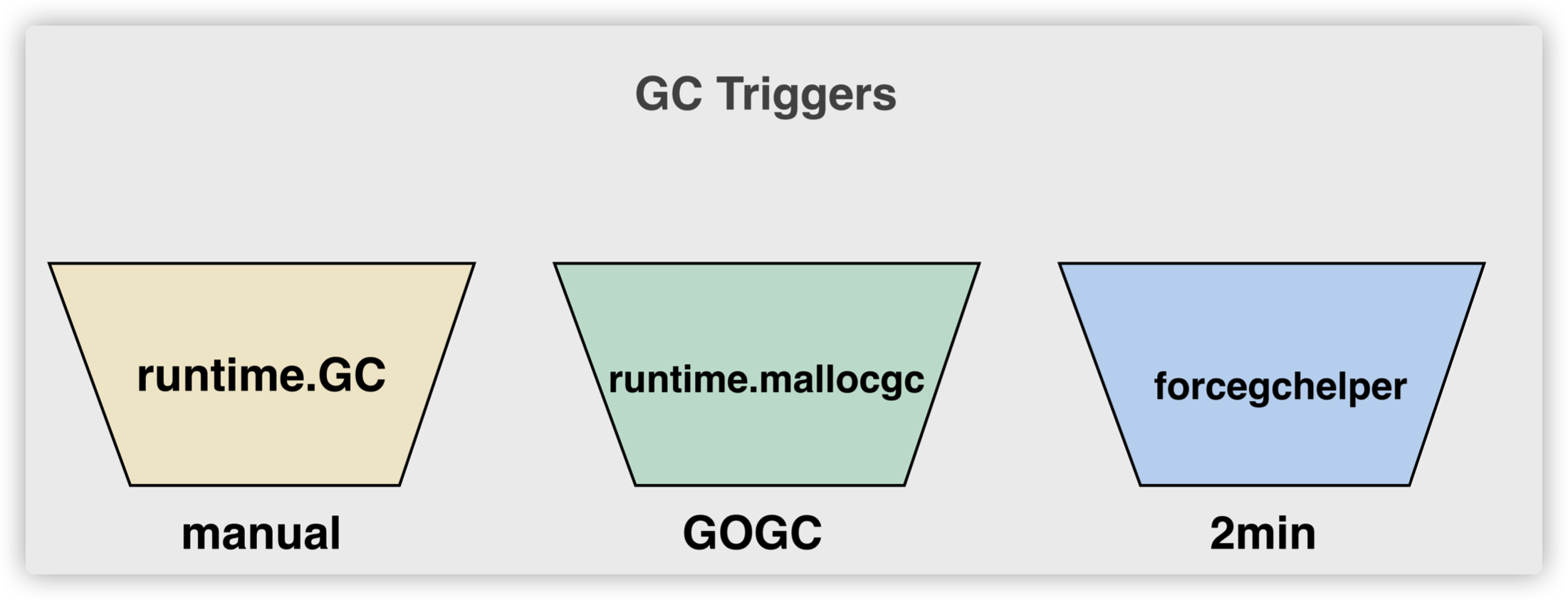

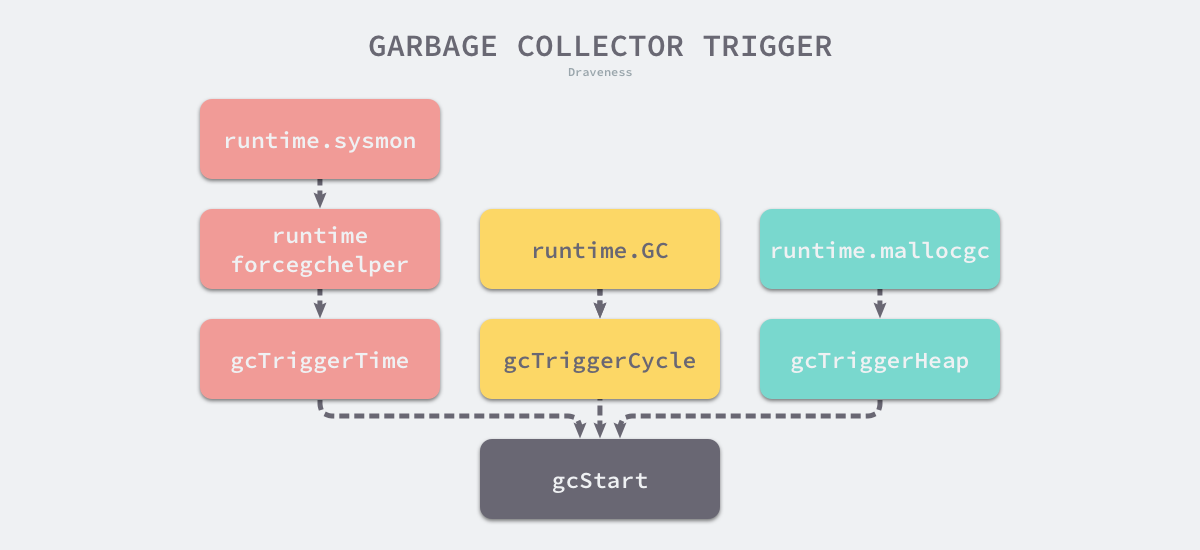

触发时机

gcTrigger

运行时会通过如下所示的 runtime.gcTrigger.test 方法决定是否需要触发垃圾收集,当满足触发垃圾收集的基本条件时 — 允许垃圾收集、程序没有崩溃并且没有处于垃圾收集循环,该方法会根据三种不同的方式触发进行不同的检查:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

|

// gcTrigger 是一个 GC 周期开始的谓词。具体而言,它是一个 _GCoff 阶段的退出条件

type gcTrigger struct {

kind gcTriggerKind

now int64 // gcTriggerTime: 当前时间

n uint32 // gcTriggerCycle: 开始的周期数

}

type gcTriggerKind int

const (

// gcTriggerHeap 表示当堆大小达到控制器计算的触发堆大小时开始一个周期

gcTriggerHeap gcTriggerKind = iota

// gcTriggerTime 表示自上一个 GC 周期后当循环超过

// forcegcperiod 纳秒时应开始一个周期。

gcTriggerTime

// gcTriggerCycle 表示如果我们还没有启动第 gcTrigger.n 个周期

// (相对于 work.cycles)时应开始一个周期。

gcTriggerCycle

)

// test 报告当前出发条件是否满足,换句话说 _GCoff 阶段的退出条件已满足。

// 退出条件应该在分配阶段已完成测试。

func (t gcTrigger) test() bool {

// 如果已禁用 GC

if !memstats.enablegc || panicking != 0 || gcphase != _GCoff {

return false

}

// 根据类别做不同判断

switch t.kind {

case gcTriggerHeap:

// 上个周期结束时剩余的加上到目前为止分配的内存 超过 触发标记阶段标准的内存

// 考虑性能问题,对非原子操作访问 heap_live 。如果我们需要触发该条件,

// 则所在线程一定会原子的写入 heap_live,从而我们会观察到我们的写入。

return memstats.heap_live >= memstats.gc_trigger

case gcTriggerTime:

// 因为允许在运行时动态调整 gcpercent,因此需要额外再检查一遍

if gcpercent < 0 {

return false

}

// 计算上次 gc 开始时间是否大于强制执行 GC 周期的时间

lastgc := int64(atomic.Load64(&memstats.last_gc_nanotime))

return lastgc != 0 && t.now-lastgc > forcegcperiod// 两分钟

case gcTriggerCycle:

// 进行测试的周期 t.n 大于实际触发的,需要进行 GC 则通过测试

return int32(t.n-work.cycles) > 0

}

return true

}

|

- gcTriggerHeap — 堆内存的分配达到控制器计算的触发堆大小;

- gcTriggerTime — 如果一定时间内没有触发,就会触发新的循环,该出发条件由 runtime.forcegcperiod 变量控制,默认为 2 分钟;

- gcTriggerCycle — 如果当前没有开启垃圾收集,则触发新的循环;

用于开启垃圾收集的方法 runtime.gcStart 会接收一个 runtime.gcTrigger 类型的谓词,我们可以根据这个触发_GCoff 退出的结构体找到所有触发的垃圾收集的代码:

- runtime.sysmon 和 runtime.forcegchelper — 后台运行定时检查和垃圾收集;

- runtime.GC — 用户程序手动触发垃圾收集;

- runtime.mallocgc — 申请内存时根据堆大小触发垃圾收集;

通过堆内存触发垃圾收集需要比较 runtime.mstats 中的两个字段:

- heap_live:表示垃圾收集中存活对象的字节数

- gc_trigger:表示触发标记的堆内存的大小

当内存中存活的对象字节数大于触发垃圾收集的堆大小时,新一轮的垃圾收集就会开始。

在这里,我们将分别介绍这两个值的计算过程:

- heap_live — 为了减少锁竞争,运行时只会在中心缓存分配或者释放内存管理单元以及在堆上分配大对象时才会更新;

- gc_trigger — 在标记终止阶段调用 runtime.gcSetTriggerRatio 更新触发下一次垃圾收集的堆大小;

runtime.gcController 会在每个循环结束后计算触发比例并通过 runtime.gcSetTriggerRatio 设置 gc_trigger,它能够决定触发垃圾收集的时间以及用户程序和后台处理的标记任务的多少,利用反馈控制的算法根据堆的增长情况和垃圾收集 CPU 利用率确定触发垃圾收集的时机。

后台触发

运行时会在应用程序启动时在后台开启一个用于强制触发垃圾收集的 Goroutine,该 Goroutine 的职责非常简单 — 调用 runtime.gcStart 方法尝试启动新一轮的垃圾收集:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

|

func init() {

go forcegchelper()

}

func forcegchelper() {

forcegc.g = getg()

lockInit(&forcegc.lock, lockRankForcegc)

for {

lock(&forcegc.lock)

if forcegc.idle != 0 {

throw("forcegc: phase error")

}

atomic.Store(&forcegc.idle, 1)

goparkunlock(&forcegc.lock, waitReasonForceGCIdle, traceEvGoBlock, 1)

// this goroutine is explicitly resumed by sysmon

if debug.gctrace > 0 {

println("GC forced")

}

// Time-triggered, fully concurrent.

gcStart(gcTrigger{kind: gcTriggerTime, now: nanotime()})

}

}

|

为了减少对计算资源的占用,该 Goroutine 会在循环中调用 runtime.goparkunlock 主动陷入休眠等待其他 Goroutine 的唤醒,runtime.forcegchelper 在大多数时间都是陷入休眠的,但是它会被系统监控器 runtime.sysmon 在满足垃圾收集条件时唤醒:

1

2

3

4

5

|

// Puts the current goroutine into a waiting state and unlocks the lock.

// The goroutine can be made runnable again by calling goready(gp).

func goparkunlock(lock *mutex, reason waitReason, traceEv byte, traceskip int) {

gopark(parkunlock_c, unsafe.Pointer(lock), reason, traceEv, traceskip)

}

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

func sysmon() {

...

for {

...

if t := (gcTrigger{kind: gcTriggerTime, now: now}); t.test() && atomic.Load(&forcegc.idle) != 0 {

lock(&forcegc.lock)

forcegc.idle = 0

var list gList

list.push(forcegc.g)

injectglist(&list)

unlock(&forcegc.lock)

}

}

}

|

系统监控在每个循环中都会主动构建一个 runtime.gcTrigger 并检查垃圾收集的触发条件是否满足,如果满足条件,系统监控会将 runtime.forcegc 状态中持有的 Goroutine 加入全局队列等待调度器的调度。

手动触发

用户程序会通过 runtime.GC 函数在程序运行期间主动通知运行时执行,该方法在调用时会阻塞调用方直到当前垃圾收集循环完成,在垃圾收集期间也可能会通过 STW 暂停整个程序:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

|

// GC runs a garbage collection and blocks the caller until the

// garbage collection is complete. It may also block the entire

// program.

func GC() {

// We consider a cycle to be: sweep termination, mark, mark

// termination, and sweep. This function shouldn't return

// until a full cycle has been completed, from beginning to

// end. Hence, we always want to finish up the current cycle

// and start a new one. That means:

//

// 1. In sweep termination, mark, or mark termination of cycle

// N, wait until mark termination N completes and transitions

// to sweep N.

//

// 2. In sweep N, help with sweep N.

//

// At this point we can begin a full cycle N+1.

//

// 3. Trigger cycle N+1 by starting sweep termination N+1.

//

// 4. Wait for mark termination N+1 to complete.

//

// 5. Help with sweep N+1 until it's done.

//

// This all has to be written to deal with the fact that the

// GC may move ahead on its own. For example, when we block

// until mark termination N, we may wake up in cycle N+2.

// Wait until the current sweep termination, mark, and mark

// termination complete.

n := atomic.Load(&work.cycles)

//在正式开始垃圾收集前,运行时需要通过 runtime.gcWaitOnMark 函数等待上一个循环的标记终止、标记和标记终止阶段完成;

gcWaitOnMark(n)

// We're now in sweep N or later. Trigger GC cycle N+1, which

// will first finish sweep N if necessary and then enter sweep

// termination N+1.

//调用 runtime.gcStart 触发新一轮的垃圾收集

gcStart(gcTrigger{kind: gcTriggerCycle, n: n + 1})

// Wait for mark termination N+1 to complete.

//通过 runtime.gcWaitOnMark 等待该轮垃圾收集的标记终止阶段正常结束;

gcWaitOnMark(n + 1)

// Finish sweep N+1 before returning. We do this both to

// complete the cycle and because runtime.GC() is often used

// as part of tests and benchmarks to get the system into a

// relatively stable and isolated state.

//持续调用 runtime.sweepone 清理全部待处理的内存管理单元并等待所有的清理工作完成,等待期间会调用 runtime.Gosched 让出处理器;

for atomic.Load(&work.cycles) == n+1 && sweepone() != ^uintptr(0) {

sweep.nbgsweep++

Gosched()

}

// Callers may assume that the heap profile reflects the

// just-completed cycle when this returns (historically this

// happened because this was a STW GC), but right now the

// profile still reflects mark termination N, not N+1.

//

// As soon as all of the sweep frees from cycle N+1 are done,

// we can go ahead and publish the heap profile.

//

// First, wait for sweeping to finish. (We know there are no

// more spans on the sweep queue, but we may be concurrently

// sweeping spans, so we have to wait.)

for atomic.Load(&work.cycles) == n+1 && atomic.Load(&mheap_.sweepers) != 0 {

Gosched()

}

// Now we're really done with sweeping, so we can publish the

// stable heap profile. Only do this if we haven't already hit

// another mark termination.

mp := acquirem()

cycle := atomic.Load(&work.cycles)

//完成本轮垃圾收集的清理工作后,通过 runtime.mProf_PostSweep 将该阶段的堆内存状态快照发布出来,我们可以获取这时的内存状态;

if cycle == n+1 || (gcphase == _GCmark && cycle == n+2) {

mProf_PostSweep()

}

releasem(mp)

}

|

手动触发垃圾收集的过程不是特别常见,一般只会在运行时的测试代码中才会出现,不过如果我们认为触发主动垃圾收集是有必要的,我们也可以直接调用该方法,但是作者并不认为这是一种推荐的做法。

申请内存

最后一个可能会触发垃圾收集的就是 runtime.mallocgc 函数了,我们在内存分配器中曾经介绍过运行时会将堆上的对象按大小分成微对象、小对象和大对象三类,这三类对象的创建都可能会触发新的垃圾收集循环:

- 当前线程的内存管理单元中不存在空闲空间时,创建微对象和小对象需要调用

runtime.mcache.nextFree 方法从中心缓存或者页堆中获取新的管理单元,在这时就可能触发垃圾收集;

- 当用户程序申请分配 32KB 以上的大对象时,一定会构建 runtime.gcTrigger 结构体尝试触发 垃圾收集;

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

shouldhelpgc := false

...

if size <= maxSmallSize {

if noscan && size < maxTinySize {

...

v := nextFreeFast(span)

if v == 0 {

v, _, shouldhelpgc = c.nextFree(tinySpanClass)

}

...

} else {

...

v := nextFreeFast(span)

if v == 0 {

v, span, shouldhelpgc = c.nextFree(spc)

}

...

}

} else {

shouldhelpgc = true

...

}

...

if shouldhelpgc {

if t := (gcTrigger{kind: gcTriggerHeap}); t.test() {

gcStart(t)

}

}

return x

}

|

垃圾收集启动:gcStart

垃圾收集在启动过程一定会调用 runtime.gcStart 函数,虽然该函数的实现比较复杂,但是它的主要职责就是修改全局的垃圾收集状态到 _GCmark 并做一些准备工作,我们会分以下几个阶段介绍该函数的实现:

- 两次调用 runtime.gcTrigger.test 方法检查是否满足垃圾收集条件;

- 暂停程序、在后台启动用于处理标记任务的工作 Goroutine、确定所有内存管理单元都被清理以及其他标记阶段开始前的准备工作;

- 进入标记阶段、准备后台的标记工作、根对象的标记工作以及微对象、恢复用户程序,进入并发扫描和标记阶段;

验证垃圾收集条件的同时,该方法还会在循环中不断调用 runtime.sweepone 清理已经被标记的内存单元,完成上一个垃圾收集循环的收尾工作:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

|

// gcStart starts the GC. It transitions from _GCoff to _GCmark (if

// debug.gcstoptheworld == 0) or performs all of GC (if

// debug.gcstoptheworld != 0).

//

// This may return without performing this transition in some cases,

// such as when called on a system stack or with locks held.

func gcStart(trigger gcTrigger) {

// 判断当前G是否可抢占, 不可抢占时不触发GC

// Since this is called from malloc and malloc is called in

// the guts of a number of libraries that might be holding

// locks, don't attempt to start GC in non-preemptible or

// potentially unstable situations.

mp := acquirem()

if gp := getg(); gp == mp.g0 || mp.locks > 1 || mp.preemptoff != "" {

releasem(mp)

return

}

releasem(mp)

mp = nil

// Pick up the remaining unswept/not being swept spans concurrently

//

// This shouldn't happen if we're being invoked in background

// mode since proportional sweep should have just finished

// sweeping everything, but rounding errors, etc, may leave a

// few spans unswept. In forced mode, this is necessary since

// GC can be forced at any point in the sweeping cycle.

//

// We check the transition condition continuously here in case

// this G gets delayed in to the next GC cycle.

//验证垃圾收集条件的同时,该方法还会在循环中不断调用 runtime.sweepone 清理已经被标记的内存单元,完成上一个垃圾收集循环的收尾工作:

for trigger.test() && sweepone() != ^uintptr(0) {

sweep.nbgsweep++

}

//在验证了垃圾收集的条件并完成了收尾工作后,该方法会通过 semacquire 获取全局的 worldsema 信号量

// Perform GC initialization and the sweep termination

// transition.

semacquire(&work.startSema)

// Re-check transition condition under transition lock.

//重新检查gcTrigger的条件是否成立, 不成立时不触发GC

if !trigger.test() {

semrelease(&work.startSema)

return

}

// For stats, check if this GC was forced by the user.

// 记录是否强制触发, gcTriggerCycle是runtime.GC用的

work.userForced = trigger.kind == gcTriggerCycle

// In gcstoptheworld debug mode, upgrade the mode accordingly.

// We do this after re-checking the transition condition so

// that multiple goroutines that detect the heap trigger don't

// start multiple STW GCs.

// 判断是否指定了禁止并行GC的参数

mode := gcBackgroundMode

if debug.gcstoptheworld == 1 {

mode = gcForceMode

} else if debug.gcstoptheworld == 2 {

mode = gcForceBlockMode

}

// Ok, we're doing it! Stop everybody else

semacquire(&gcsema)

semacquire(&worldsema)

// 跟踪处理

if trace.enabled {

traceGCStart()

}

// Check that all Ps have finished deferred mcache flushes.

for _, p := range allp {

if fg := atomic.Load(&p.mcache.flushGen); fg != mheap_.sweepgen {

println("runtime: p", p.id, "flushGen", fg, "!= sweepgen", mheap_.sweepgen)

throw("p mcache not flushed")

}

}

//调用 runtime.gcBgMarkStartWorkers 启动后台标记任务

gcBgMarkStartWorkers()

// 重置标记相关的状态

systemstack(gcResetMarkState)

//修改全局变量 runtime.work 持有的状态,包括垃圾收集需要的 Goroutine 数量以及已完成的循环数。

work.stwprocs, work.maxprocs = gomaxprocs, gomaxprocs

if work.stwprocs > ncpu {

// This is used to compute CPU time of the STW phases,

// so it can't be more than ncpu, even if GOMAXPROCS is.

work.stwprocs = ncpu

}

work.heap0 = atomic.Load64(&memstats.heap_live)

work.pauseNS = 0

work.mode = mode

// 记录开始时间

now := nanotime()

work.tSweepTerm = now

work.pauseStart = now

if trace.enabled {

traceGCSTWStart(1)

}

//在系统栈中调用 runtime.stopTheWorldWithSema 停止世界

systemstack(stopTheWorldWithSema)

// Finish sweep before we start concurrent scan.

// 清扫上一轮GC未清扫的span, 确保上一轮GC已完成

systemstack(func() {

finishsweep_m()

})

// clearpools before we start the GC. If we wait they memory will not be

// reclaimed until the next GC cycle.

// 清扫sched.sudogcache和sched.deferpool

clearpools()

// 增加GC计数

work.cycles++

// 标记新一轮GC已开始

gcController.startCycle()

work.heapGoal = memstats.next_gc

// In STW mode, disable scheduling of user Gs. This may also

// disable scheduling of this goroutine, so it may block as

// soon as we start the world again.

if mode != gcBackgroundMode {

schedEnableUser(false)

}

// Enter concurrent mark phase and enable

// write barriers.

//

// Because the world is stopped, all Ps will

// observe that write barriers are enabled by

// the time we start the world and begin

// scanning.

//

// Write barriers must be enabled before assists are

// enabled because they must be enabled before

// any non-leaf heap objects are marked. Since

// allocations are blocked until assists can

// happen, we want enable assists as early as

// possible.

//在完成全部的准备工作后,该方法就进入了执行的最后阶段。在该阶段中,我们会修改全局的垃圾收集状态到 _GCmark 并依次执行下面的步骤:

setGCPhase(_GCmark)

//调用 runtime.gcBgMarkPrepare 函数初始化后台扫描需要的状态;

gcBgMarkPrepare() // Must happen before assist enable.

//调用 runtime.gcMarkRootPrepare 函数扫描栈上、全局变量等根对象并将它们加入队列;

gcMarkRootPrepare()

// Mark all active tinyalloc blocks. Since we're

// allocating from these, they need to be black like

// other allocations. The alternative is to blacken

// the tiny block on every allocation from it, which

// would slow down the tiny allocator.

// 标记所有tiny alloc等待合并的对象

gcMarkTinyAllocs()

// At this point all Ps have enabled the write

// barrier, thus maintaining the no white to

// black invariant. Enable mutator assists to

// put back-pressure on fast allocating

// mutators.

//设置全局变量 runtime.gcBlackenEnabled,用户程序和标记任务可以将对象涂黑;

// 启用辅助GC

atomic.Store(&gcBlackenEnabled, 1)

// Assists and workers can start the moment we start

// the world.

// 记录标记开始的时间

gcController.markStartTime = now

// In STW mode, we could block the instant systemstack

// returns, so make sure we're not preemptible.

mp = acquirem()

// Concurrent mark.

// 调用 runtime.startTheWorldWithSema 启动程序,后台任务也会开始标记堆中的对象;

// 重新启动世界

// 前面创建的后台标记任务会开始工作, 所有后台标记任务都完成工作后, 进入完成标记阶段

systemstack(func() {

now = startTheWorldWithSema(trace.enabled)

// 记录停止了多久, 和标记阶段开始的时间

work.pauseNS += now - work.pauseStart

work.tMark = now

})

// Release the world sema before Gosched() in STW mode

// because we will need to reacquire it later but before

// this goroutine becomes runnable again, and we could

// self-deadlock otherwise.

semrelease(&worldsema)

releasem(mp)

// Make sure we block instead of returning to user code

// in STW mode.

if mode != gcBackgroundMode {

Gosched()

}

semrelease(&work.startSema)

}

|

在分析垃圾收集的启动过程中,我们省略了几个关键的过程,其中包括暂停和恢复应用程序和后台任务的启动,下面将详细分析这几个过程的实现原理。

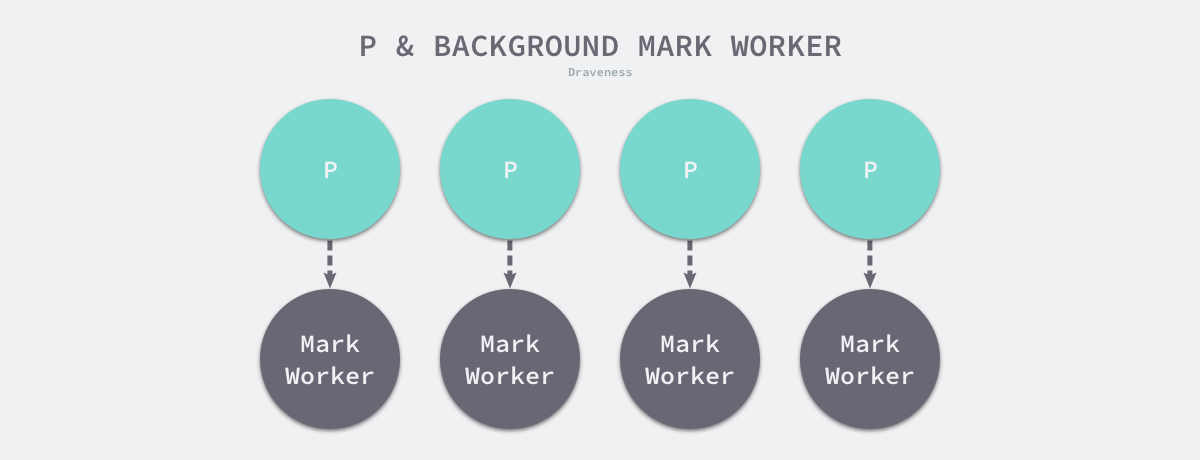

gcBgMarkStartWorkers

在垃圾收集启动期间,运行时会调用 runtime.gcBgMarkStartWorkers 为全局每个处理器创建用于执行后台标记任务的 Goroutine,这些 Goroutine 都会运行 runtime.gcBgMarkWorker,所有运行 runtime.gcBgMarkWorker 的 Goroutine 在启动后都会陷入休眠等待调度器的唤醒:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

// gcBgMarkStartWorkers prepares background mark worker goroutines.

// These goroutines will not run until the mark phase, but they must

// be started while the work is not stopped and from a regular G

// stack. The caller must hold worldsema.

func gcBgMarkStartWorkers() {

// Background marking is performed by per-P G's. Ensure that

// each P has a background GC G.

for _, p := range allp {

// 如果已启动则不重复启动

if p.gcBgMarkWorker == 0 {

go gcBgMarkWorker(p)

// 启动后等待该任务通知信号量bgMarkReady再继续

notetsleepg(&work.bgMarkReady, -1)

noteclear(&work.bgMarkReady)

}

}

//zhouyunjia

}

|

这些 Goroutine 与处理器是一一对应的关系

gcResetMarkState

gcResetMarkState函数会重置标记相关的状态:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

|

// gcResetMarkState resets global state prior to marking (concurrent

// or STW) and resets the stack scan state of all Gs.

//

// This is safe to do without the world stopped because any Gs created

// during or after this will start out in the reset state.

//

// gcResetMarkState must be called on the system stack because it acquires

// the heap lock. See mheap for details.

//

//go:systemstack

func gcResetMarkState() {

// This may be called during a concurrent phase, so make sure

// allgs doesn't change.

lock(&allglock)

for _, gp := range allgs {

gp.gcscandone = false // set to true in gcphasework

gp.gcAssistBytes = 0

}

unlock(&allglock)

// Clear page marks. This is just 1MB per 64GB of heap, so the

// time here is pretty trivial.

lock(&mheap_.lock)

arenas := mheap_.allArenas

unlock(&mheap_.lock)

for _, ai := range arenas {

ha := mheap_.arenas[ai.l1()][ai.l2()]

for i := range ha.pageMarks {

ha.pageMarks[i] = 0

}

}

work.bytesMarked = 0

work.initialHeapLive = atomic.Load64(&memstats.heap_live)

}

|

stopTheWorldWithSema

函数会停止整个世界, 这个函数必须在g0中运行:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

|

// stopTheWorldWithSema is the core implementation of stopTheWorld.

// The caller is responsible for acquiring worldsema and disabling

// preemption first and then should stopTheWorldWithSema on the system

// stack:

//

// semacquire(&worldsema, 0)

// m.preemptoff = "reason"

// systemstack(stopTheWorldWithSema)

//

// When finished, the caller must either call startTheWorld or undo

// these three operations separately:

//

// m.preemptoff = ""

// systemstack(startTheWorldWithSema)

// semrelease(&worldsema)

//

// It is allowed to acquire worldsema once and then execute multiple

// startTheWorldWithSema/stopTheWorldWithSema pairs.

// Other P's are able to execute between successive calls to

// startTheWorldWithSema and stopTheWorldWithSema.

// Holding worldsema causes any other goroutines invoking

// stopTheWorld to block.

func stopTheWorldWithSema() {

_g_ := getg()

// If we hold a lock, then we won't be able to stop another M

// that is blocked trying to acquire the lock.

if _g_.m.locks > 0 {

throw("stopTheWorld: holding locks")

}

lock(&sched.lock)

//因为程序中活跃的最大处理数为 gomaxprocs,所以在每次发现停止的处理器时都会对该变量减一,直到所有的处理器都停止运行。

// 设置stopwait的初始值为最大的 p 的个数

sched.stopwait = gomaxprocs

// 设置gc等待标记, 调度时看见此标记会进入等待

atomic.Store(&sched.gcwaiting, 1)

// 给所有的 p 发送抢占信号,如果成功,则对应的 p 进入 idle 状态

// 抢占所有运行中的G

preemptall()

//依次停止当前处理器、等待处于系统调用的处理器以及获取并抢占空闲的处理器,处理器的状态在该函数返回时都会被更新至_Pgcstop,等待垃圾收集器的重新唤醒。

// stop current P

_g_.m.p.ptr().status = _Pgcstop // Pgcstop is only diagnostic.

// 减少需要停止的P数量(当前的P算一个)

sched.stopwait--

// try to retake all P's in Psyscall status

// 抢占所有在Psyscall状态的P, 防止它们重新参与调度

// 遍历所有的 p 如果满足条件(p的状态为 _Psyscall)则释放这个 p , 并且把 p 的状态都设置成 _Pgcstop ; 然后stopwait--

for _, p := range allp {

s := p.status

if s == _Psyscall && atomic.Cas(&p.status, s, _Pgcstop) {

if trace.enabled {

traceGoSysBlock(p)

traceProcStop(p)

}

p.syscalltick++

sched.stopwait--

}

}

// stop idle P's

// 防止所有空闲的P重新参与调度

for {

//获取idle 状态的 p, 从 _Pidle list 获取

p := pidleget()

if p == nil {

break

}

// 把 p 状态设置为 _Pgcstop

p.status = _Pgcstop

// 计数 stopwait --

sched.stopwait--

}

wait := sched.stopwait > 0

unlock(&sched.lock)

// 如果仍有需要停止的P, 则等待它们停止

// wait for remaining P's to stop voluntarily

if wait {

for {

// wait for 100us, then try to re-preempt in case of any races

// 循环等待 + 抢占所有运行中的G

//notetsleep函数内部每隔一段时间就会返回:

if notetsleep(&sched.stopnote, 100*1000) {

noteclear(&sched.stopnote)

break

}

preemptall()

}

}

// sanity checks

// 逻辑正确性检查

bad := ""

if sched.stopwait != 0 {

bad = "stopTheWorld: not stopped (stopwait != 0)"

} else {

for _, p := range allp {

if p.status != _Pgcstop {

bad = "stopTheWorld: not stopped (status != _Pgcstop)"

}

}

}

if atomic.Load(&freezing) != 0 {

// Some other thread is panicking. This can cause the

// sanity checks above to fail if the panic happens in

// the signal handler on a stopped thread. Either way,

// we should halt this thread.

lock(&deadlock)

lock(&deadlock)

}

if bad != "" {

throw(bad)

}

// 到这里所有运行中的G都会变为待运行, 并且所有的P都不能被M获取

// 也就是说所有的go代码(除了当前的)都会停止运行, 并且不能运行新的go代码

}

func notetsleep(n *note, ns int64) bool {

gp := getg()

if gp != gp.m.g0 {

throw("notetsleep not on g0")

}

semacreate(gp.m)

return notetsleep_internal(n, ns, nil, 0)

}

|

暂停程序主要使用了 runtime.preemptall 函数,该函数会调用我们runtime.preemptone.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

// Tell all goroutines that they have been preempted and they should stop.

// This function is purely best-effort. It can fail to inform a goroutine if a

// processor just started running it.

// No locks need to be held.

// Returns true if preemption request was issued to at least one goroutine.

func preemptall() bool {

res := false

for _, _p_ := range allp {

if _p_.status != _Prunning {

continue

}

if preemptone(_p_) {

res = true

}

}

return res

}

|

notetsleep函数内部每隔一段时间就会返回:

1

|

return atomic.Load(key32(&n.key)) != 0 // n.key 为参数 &shced.stopnote.key的值

|

如果要想让返回值为 true 就需要满足上面的条件。 stopnote.key的值有两个函数可以控制:

- notewakeup 把 stopnote 设置为 1

- noteclear 把stopnote设置为 0

所以我们需要调用notewakeup才行。而这个函数我们可以看到是在gcstopm()有调用:

1

2

3

4

5

6

7

8

9

10

11

12

13

|

// Stops the current m for stopTheWorld.

// Returns when the world is restarted.

func gcstopm() {

...

lock(&sched.lock)

_p_.status = _Pgcstop

sched.stopwait--

if sched.stopwait == 0 {

notewakeup(&sched.stopnote)

}

unlock(&sched.lock)

stopm()

}

|

当sched.stopwait为0时,会唤醒notetsleep,继续执行下面的操作.

finishsweep_m

finishsweep_m函数会清扫上一轮GC未清扫的span, 确保上一轮GC已完成:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

|

// finishsweep_m ensures that all spans are swept.

//

// The world must be stopped. This ensures there are no sweeps in

// progress.

//

//go:nowritebarrier

func finishsweep_m() {

// sweepone会取出一个未sweep的span然后执行sweep

// Sweeping must be complete before marking commences, so

// sweep any unswept spans. If this is a concurrent GC, there

// shouldn't be any spans left to sweep, so this should finish

// instantly. If GC was forced before the concurrent sweep

// finished, there may be spans to sweep.

for sweepone() != ^uintptr(0) {

sweep.npausesweep++

}

if go115NewMCentralImpl {

// Reset all the unswept buffers, which should be empty.

// Do this in sweep termination as opposed to mark termination

// so that we can catch unswept spans and reclaim blocks as

// soon as possible.

sg := mheap_.sweepgen

for i := range mheap_.central {

c := &mheap_.central[i].mcentral

c.partialUnswept(sg).reset()

c.fullUnswept(sg).reset()

}

}

// Sweeping is done, so if the scavenger isn't already awake,

// wake it up. There's definitely work for it to do at this

// point.

wakeScavenger()

// 所有span都sweep完成后, 启动一个新的markbit时代

// 这个函数是实现span的gcmarkBits和allocBits的分配和复用的关键, 流程如下

// - span分配gcmarkBits和allocBits

// - span完成sweep

// - 原allocBits不再被使用

// - gcmarkBits变为allocBits

// - 分配新的gcmarkBits

// - 开启新的markbit时代

// - span完成sweep, 同上

// - 开启新的markbit时代

// - 2个时代之前的bitmap将不再被使用, 可以复用这些bitmap

nextMarkBitArenaEpoch()

}

|

clearpools

clearpools函数会清理sched.sudogcache和sched.deferpool, 让它们的内存可以被回收:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

|

func clearpools() {

// clear sync.Pools

if poolcleanup != nil {

poolcleanup()

}

// Clear central sudog cache.

// Leave per-P caches alone, they have strictly bounded size.

// Disconnect cached list before dropping it on the floor,

// so that a dangling ref to one entry does not pin all of them.

lock(&sched.sudoglock)

var sg, sgnext *sudog

for sg = sched.sudogcache; sg != nil; sg = sgnext {

sgnext = sg.next

sg.next = nil

}

sched.sudogcache = nil

unlock(&sched.sudoglock)

// Clear central defer pools.

// Leave per-P pools alone, they have strictly bounded size.

lock(&sched.deferlock)

for i := range sched.deferpool {

// disconnect cached list before dropping it on the floor,

// so that a dangling ref to one entry does not pin all of them.

var d, dlink *_defer

for d = sched.deferpool[i]; d != nil; d = dlink {

dlink = d.link

d.link = nil

}

sched.deferpool[i] = nil

}

unlock(&sched.deferlock)

}

|

startCycle

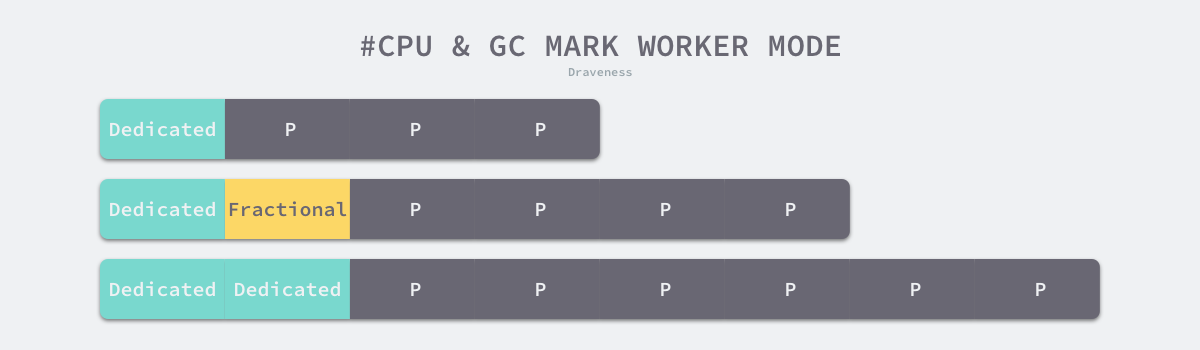

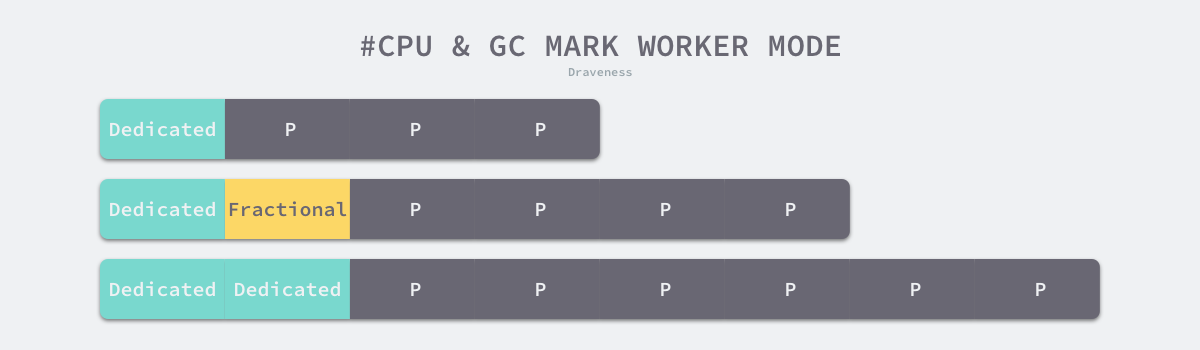

用于并发扫描对象的工作协程 Goroutine 总共有三种不同的模式 runtime.gcMarkWorkerMode,这三种不同模式的 Goroutine 在标记对象时使用完全不同的策略,垃圾收集控制器会按照需要执行不同类型的工作协程:

- gcMarkWorkerDedicatedMode — 处理器专门负责标记对象,不会被调度器抢占;

- gcMarkWorkerFractionalMode — 当垃圾收集的后台 CPU 使用率达不到预期时(默认为 25%),启动该类型的工作协程帮助垃圾收集达到利用率的目标,因为它只占用同一个 CPU 的部分资源,所以可以被调度;

- gcMarkWorkerIdleMode — 当处理器没有可以执行的 Goroutine 时,它会运行垃圾收集的标记任务直到被抢占;

runtime.gcControllerState.startCycle 会根据全局处理器的个数以及垃圾收集的 CPU 利用率计算出 dedicatedMarkWorkersNeeded 和 fractionalUtilizationGoal 以决定不同模式的工作协程的数量。

因为后台标记任务的 CPU 利用率为 25%,如果主机是 4 核或者 8 核,那么垃圾收集需要 1 个或者 2 个专门处理相关任务的 Goroutine;不过如果主机是 3 核或者 6 核,因为无法被 4 整除,所以这时需要 0 个或者 1 个专门处理垃圾收集的 Goroutine,运行时需要占用某个 CPU 的部分时间,使用 gcMarkWorkerFractionalMode 模式的协程保证 CPU 的利用率。

正常情况下gc的CPU占用会被约束在25%,超过25%的话,应用协程经常被征用去做mark assist,应用延迟会变高

三种不同模式的工作协程会相互协同保证垃圾收集的 CPU 利用率达到期望的阈值,在到达目标堆大小前完成标记任务。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

|

// startCycle resets the GC controller's state and computes estimates

// for a new GC cycle. The caller must hold worldsema.

func (c *gcControllerState) startCycle() {

c.scanWork = 0

c.bgScanCredit = 0

c.assistTime = 0

c.dedicatedMarkTime = 0

c.fractionalMarkTime = 0

c.idleMarkTime = 0

// 确保next_gc和heap_live之间最少有1MB

// Ensure that the heap goal is at least a little larger than

// the current live heap size. This may not be the case if GC

// start is delayed or if the allocation that pushed heap_live

// over gc_trigger is large or if the trigger is really close to

// GOGC. Assist is proportional to this distance, so enforce a

// minimum distance, even if it means going over the GOGC goal

// by a tiny bit.

if memstats.next_gc < memstats.heap_live+1024*1024 {

memstats.next_gc = memstats.heap_live + 1024*1024

}

// 计算可以同时执行的后台标记任务的数量

// dedicatedMarkWorkersNeeded等于P的数量的25%去除小数点

// 如果可以整除则fractionalMarkWorkersNeeded等于0否则等于1

// totalUtilizationGoal是GC所占的P的目标值(例如P一共有5个时目标是1.25个P)

// fractionalUtilizationGoal是Fractiona模式的任务所占的P的目标值(例如P一共有5个时目标是0.25个P)

// Compute the background mark utilization goal. In general,

// this may not come out exactly. We round the number of

// dedicated workers so that the utilization is closest to

// 25%. For small GOMAXPROCS, this would introduce too much

// error, so we add fractional workers in that case.

totalUtilizationGoal := float64(gomaxprocs) * gcBackgroundUtilization

c.dedicatedMarkWorkersNeeded = int64(totalUtilizationGoal + 0.5)

utilError := float64(c.dedicatedMarkWorkersNeeded)/totalUtilizationGoal - 1

const maxUtilError = 0.3

if utilError < -maxUtilError || utilError > maxUtilError {

// Rounding put us more than 30% off our goal. With

// gcBackgroundUtilization of 25%, this happens for

// GOMAXPROCS<=3 or GOMAXPROCS=6. Enable fractional

// workers to compensate.

if float64(c.dedicatedMarkWorkersNeeded) > totalUtilizationGoal {

// Too many dedicated workers.

c.dedicatedMarkWorkersNeeded--

}

c.fractionalUtilizationGoal = (totalUtilizationGoal - float64(c.dedicatedMarkWorkersNeeded)) / float64(gomaxprocs)

} else {

c.fractionalUtilizationGoal = 0

}

// In STW mode, we just want dedicated workers.

if debug.gcstoptheworld > 0 {

c.dedicatedMarkWorkersNeeded = int64(gomaxprocs)

c.fractionalUtilizationGoal = 0

}

// 重置P中的辅助GC所用的时间统计

// Clear per-P state

for _, p := range allp {

p.gcAssistTime = 0

p.gcFractionalMarkTime = 0

}

// 计算辅助GC的参数

// 参考上面对计算assistWorkPerByte的公式的分析

// Compute initial values for controls that are updated

// throughout the cycle.

c.revise()

if debug.gcpacertrace > 0 {

print("pacer: assist ratio=", c.assistWorkPerByte,

" (scan ", memstats.heap_scan>>20, " MB in ",

work.initialHeapLive>>20, "->",

memstats.next_gc>>20, " MB)",

" workers=", c.dedicatedMarkWorkersNeeded,

"+", c.fractionalUtilizationGoal, "\n")

}

}

|

setGCPhase

setGCPhase函数会修改表示当前GC阶段的全局变量和是否开启写屏障的全局变量:

1

2

3

4

5

6

|

//go:nosplit

func setGCPhase(x uint32) {

atomic.Store(&gcphase, x)

writeBarrier.needed = gcphase == _GCmark || gcphase == _GCmarktermination

writeBarrier.enabled = writeBarrier.needed || writeBarrier.cgo

}

|

写屏障是保证 Go 语言并发标记安全不可或缺的技术,我们需要使用混合写屏障维护对象图的弱三色不变性,然而写屏障的实现需要编译器和运行时的共同协作。在 SSA 中间代码生成阶段,编译器会使用 cmd/compile/internal/ssa.writebarrier 函数在 Store、Move 和 Zero 操作中加入写屏障,生成如下所示的代码:

1

2

3

4

5

|

if writeBarrier.enabled {

gcWriteBarrier(ptr, val)

} else {

*ptr = val

}

|

当 Go 语言进入垃圾收集阶段时,全局变量 runtime.writeBarrier 中的 enabled 字段会被置成开启,所有的写操作都会调用 runtime.gcWriteBarrier:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

|

TEXT runtime·gcWriteBarrier(SB),NOSPLIT,$28

...

get_tls(BX)

MOVL g(BX), BX

MOVL g_m(BX), BX

MOVL m_p(BX), BX

MOVL (p_wbBuf+wbBuf_next)(BX), CX

LEAL 8(CX), CX

MOVL CX, (p_wbBuf+wbBuf_next)(BX)

CMPL CX, (p_wbBuf+wbBuf_end)(BX)

MOVL AX, -8(CX) // 记录值

MOVL (DI), BX

MOVL BX, -4(CX) // 记录 *slot

JEQ flush

ret:

MOVL 20(SP), CX

MOVL 24(SP), BX

MOVL AX, (DI) // 触发写操作

RET

flush:

...

CALL runtime·wbBufFlush(SB)

...

JMP ret

|

在上述汇编函数中,DI 寄存器是写操作的目的地址,AX 寄存器中存储了被覆盖的值,该函数会覆盖原来的值并通过 runtime.wbBufFlush 通知垃圾收集器将原值和新值加入当前处理器的工作队列,因为该写屏障的实现比较复杂,所以写屏障对程序的性能还是有比较大的影响,之前只需要一条指令完成的工作,现在需要几十条指令。

我们在上面提到过 Dijkstra 和 Yuasa 写屏障组成的混合写屏障在开启后,所有新创建的对象都需要被直接涂成黑色,这里的标记过程是由 runtime.gcmarknewobject 完成的:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

if gcphase != _GCoff {

gcmarknewobject(uintptr(x), size, scanSize)

}

...

}

func gcmarknewobject(obj, size, scanSize uintptr) {

markBitsForAddr(obj).setMarked()

gcw := &getg().m.p.ptr().gcw

gcw.bytesMarked += uint64(size)

gcw.scanWork += int64(scanSize)

}

|

runtime.mallocgc 会在垃圾收集开始后调用该函数,获取对象对应的内存单元以及标记位 runtime.markBits 并调用 runtime.markBits.setMarked 直接将新的对象涂成黑色。

gcBgMarkPrepare

gcBgMarkPrepare函数会重置后台标记任务的计数:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

// gcBgMarkPrepare sets up state for background marking.

// Mutator assists must not yet be enabled.

func gcBgMarkPrepare() {

// Background marking will stop when the work queues are empty

// and there are no more workers (note that, since this is

// concurrent, this may be a transient state, but mark

// termination will clean it up). Between background workers

// and assists, we don't really know how many workers there

// will be, so we pretend to have an arbitrarily large number

// of workers, almost all of which are "waiting". While a

// worker is working it decrements nwait. If nproc == nwait,

// there are no workers.

work.nproc = ^uint32(0)

work.nwait = ^uint32(0)

}

|

gcMarkRootPrepare

gcMarkRootPrepare函数会计算扫描根对象的任务数量:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

|

// gcMarkRootPrepare queues root scanning jobs (stacks, globals, and

// some miscellany) and initializes scanning-related state.

//

// The world must be stopped.

func gcMarkRootPrepare() {

work.nFlushCacheRoots = 0

// 计算block数量的函数, rootBlockBytes是256KB

// Compute how many data and BSS root blocks there are.

nBlocks := func(bytes uintptr) int {

return int(divRoundUp(bytes, rootBlockBytes))

}

work.nDataRoots = 0

work.nBSSRoots = 0

// Scan globals.

// 计算扫描可读写的全局变量的任务数量

for _, datap := range activeModules() {

nDataRoots := nBlocks(datap.edata - datap.data)

if nDataRoots > work.nDataRoots {

work.nDataRoots = nDataRoots

}

}

// 计算扫描只读的全局变量的任务数量

for _, datap := range activeModules() {

nBSSRoots := nBlocks(datap.ebss - datap.bss)

if nBSSRoots > work.nBSSRoots {

work.nBSSRoots = nBSSRoots

}

}

// Scan span roots for finalizer specials.

//

// We depend on addfinalizer to mark objects that get

// finalizers after root marking.

if go115NewMarkrootSpans {

// We're going to scan the whole heap (that was available at the time the

// mark phase started, i.e. markArenas) for in-use spans which have specials.

//

// Break up the work into arenas, and further into chunks.

//

// Snapshot allArenas as markArenas. This snapshot is safe because allArenas

// is append-only.

mheap_.markArenas = mheap_.allArenas[:len(mheap_.allArenas):len(mheap_.allArenas)]

work.nSpanRoots = len(mheap_.markArenas) * (pagesPerArena / pagesPerSpanRoot)

} else {

// We're only interested in scanning the in-use spans,

// which will all be swept at this point. More spans

// may be added to this list during concurrent GC, but

// we only care about spans that were allocated before

// this mark phase.

work.nSpanRoots = mheap_.sweepSpans[mheap_.sweepgen/2%2].numBlocks()

}

// Scan stacks.

//

// Gs may be created after this point, but it's okay that we

// ignore them because they begin life without any roots, so

// there's nothing to scan, and any roots they create during

// the concurrent phase will be scanned during mark

// termination.

work.nStackRoots = int(atomic.Loaduintptr(&allglen))

// 计算总任务数量

// 后台标记任务会对markrootNext进行原子递增, 来决定做哪个任务

// 这种用数值来实现锁自由队列的办法挺聪明的, 尽管google工程师觉得不好(看后面markroot函数的分析)

work.markrootNext = 0

work.markrootJobs = uint32(fixedRootCount + work.nFlushCacheRoots + work.nDataRoots + work.nBSSRoots + work.nSpanRoots + work.nStackRoots)

}

|

gcMarkTinyAllocs

gcMarkTinyAllocs函数会标记所有tiny alloc等待合并的对象:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

|

// gcMarkTinyAllocs greys all active tiny alloc blocks.

//

// The world must be stopped.

func gcMarkTinyAllocs() {

for _, p := range allp {

c := p.mcache

if c == nil || c.tiny == 0 {

continue

}

// 标记各个P中的mcache中的tiny

// 在上面的mallocgc函数中可以看到tiny是当前等待合并的对象

_, span, objIndex := findObject(c.tiny, 0, 0)

gcw := &p.gcw

// 标记一个对象存活, 并把它加到标记队列(该对象变为灰色)

greyobject(c.tiny, 0, 0, span, gcw, objIndex)

}

}

|

startTheWorldWithSema

startTheWorldWithSema函数会重新启动世界:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

|

func startTheWorldWithSema(emitTraceEvent bool) int64 {

mp := acquirem() // disable preemption because it can be holding p in a local var

// 调用 runtime.netpoll 从网络轮询器中获取待处理的任务并加入全局队列;

// 判断收到的网络事件(fd可读可写或错误)并添加对应的G到待运行队列

if netpollinited() {

list := netpoll(0) // non-blocking

injectglist(&list)

}

lock(&sched.lock)

// 如果要求改变gomaxprocs则调整P的数量

// procresize会返回有可运行任务的P的链表

procs := gomaxprocs

if newprocs != 0 {

procs = newprocs

newprocs = 0

}

//调用 runtime.procresize 扩容或者缩容全局的处理器;

p1 := procresize(procs)

// 取消GC等待标记

sched.gcwaiting = 0

// 如果sysmon在等待则唤醒它

if sched.sysmonwait != 0 {

sched.sysmonwait = 0

notewakeup(&sched.sysmonnote)

}

unlock(&sched.lock)

// 唤醒有可运行任务的P

//调用 runtime.notewakeup 或者 runtime.newm 依次唤醒处理器或者为处理器创建新的线程;

for p1 != nil {

p := p1

p1 = p1.link.ptr()

if p.m != 0 {

mp := p.m.ptr()

p.m = 0

if mp.nextp != 0 {

throw("startTheWorld: inconsistent mp->nextp")

}

mp.nextp.set(p)

notewakeup(&mp.park)

} else {

// Start M to run P. Do not start another M below.

newm(nil, p, -1)

}

}

// Capture start-the-world time before doing clean-up tasks.

startTime := nanotime()

if emitTraceEvent {

traceGCSTWDone()

}

// Wakeup an additional proc in case we have excessive runnable goroutines

// in local queues or in the global queue. If we don't, the proc will park itself.

// If we have lots of excessive work, resetspinning will unpark additional procs as necessary.

//如果当前待处理的 Goroutine 数量过多,创建额外的处理器辅助完成任务;

wakep()

releasem(mp)

return startTime

}

// Tries to add one more P to execute G's.

// Called when a G is made runnable (newproc, ready).

func wakep() {

if atomic.Load(&sched.npidle) == 0 {

return

}

// be conservative about spinning threads

if atomic.Load(&sched.nmspinning) != 0 || !atomic.Cas(&sched.nmspinning, 0, 1) {

return

}

startm(nil, true)

}

|

重启世界后各个M会重新开始调度, 调度时会优先使用findRunnableGCWorker函数查找任务, 之后就有大约25%的P运行后台标记任务.

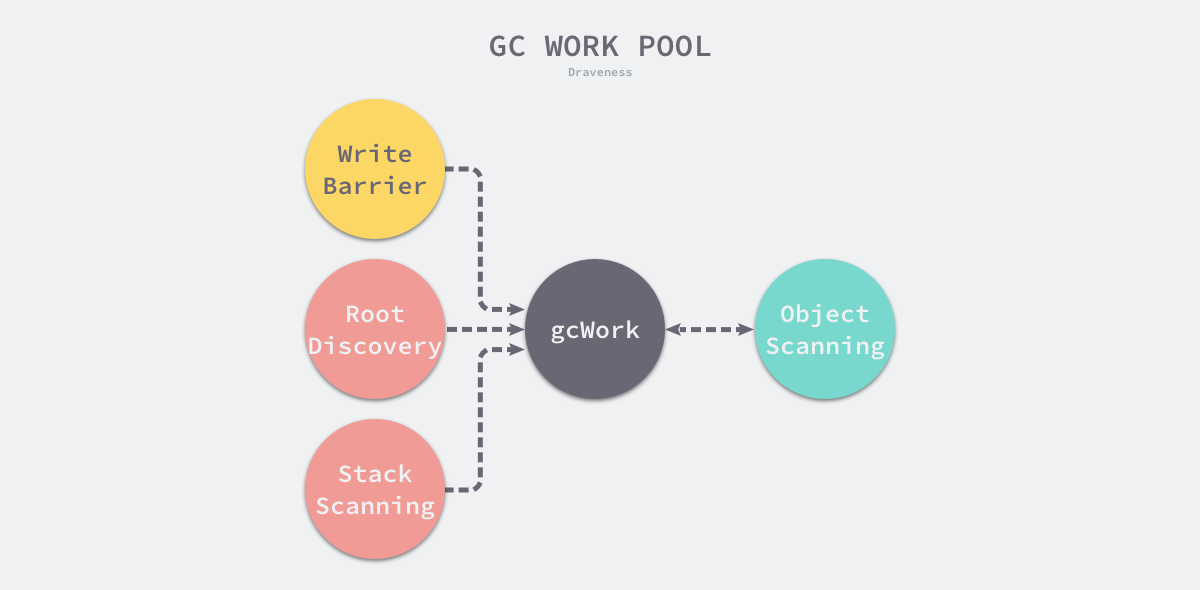

并发扫描与标记辅助

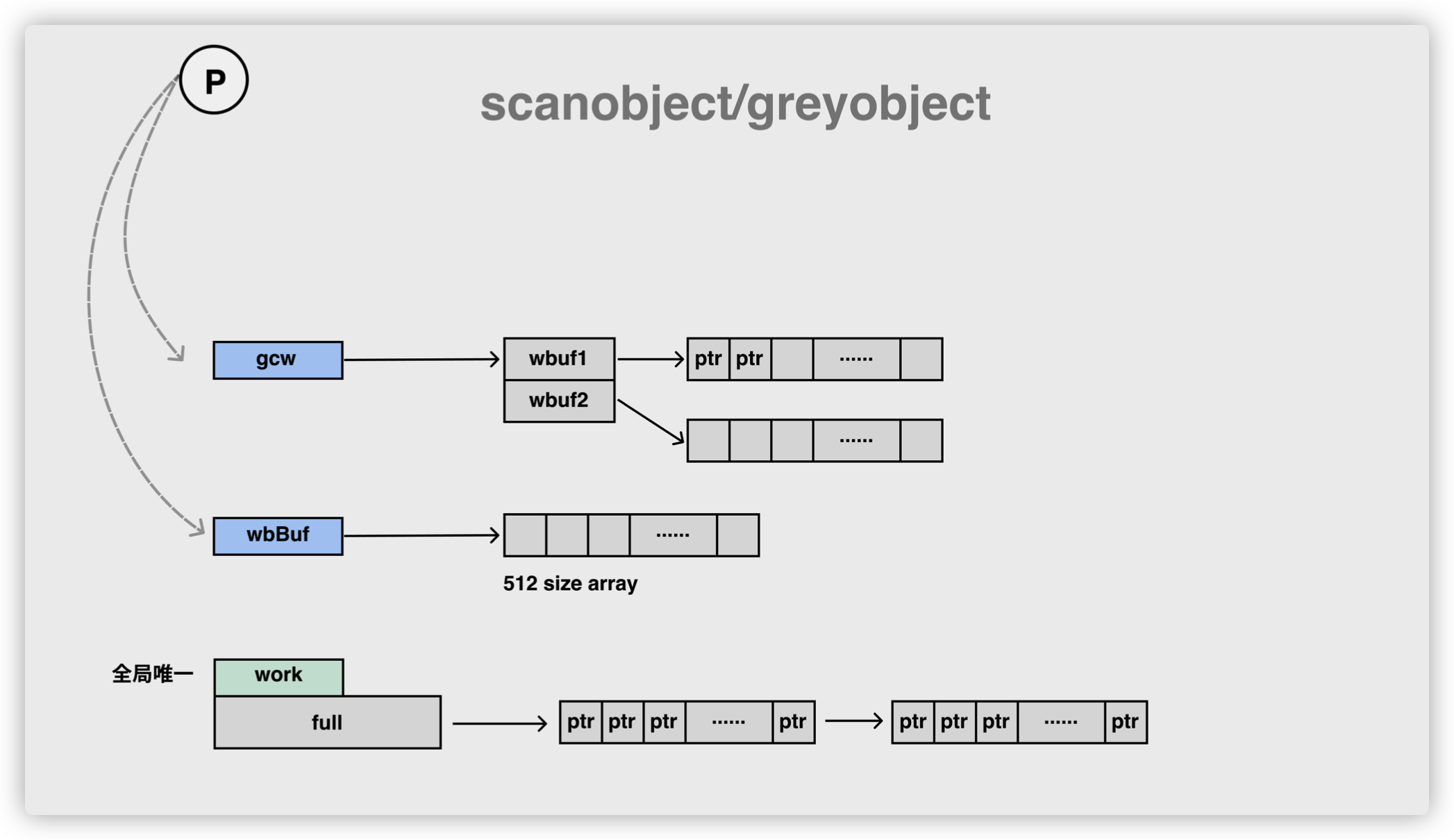

标记对象从哪里来?

- gcMarkWorker

- Markassist

- mutatorwrite/deleteheappointers

标记对象到哪里去?

- Workbuffer

- 本地workbuffer=>p.gcw

- 全局workbuffer=>runtime.work.full • Write barrier buffer

- 本地writebarrierbuffer=>p.wbBuf

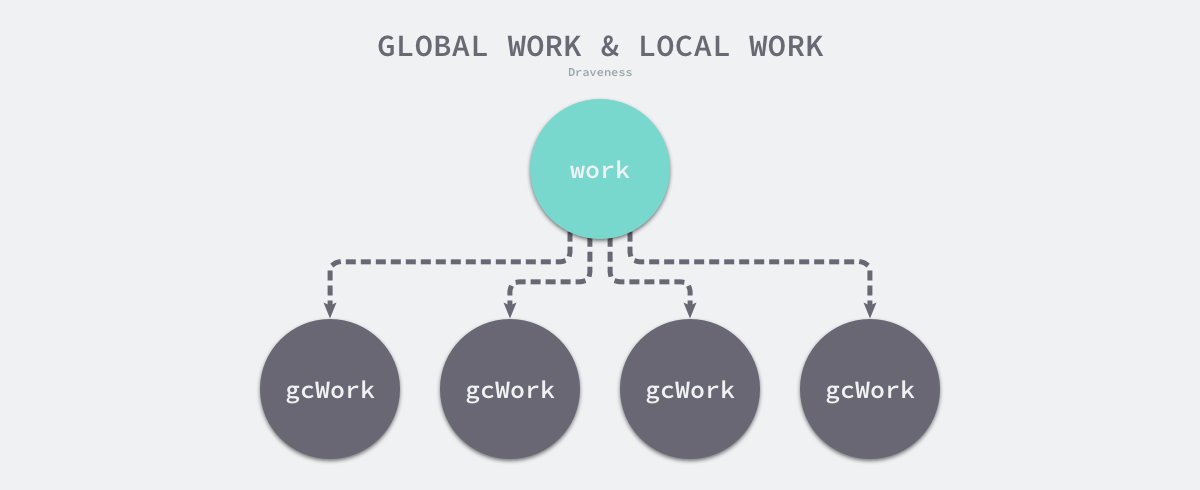

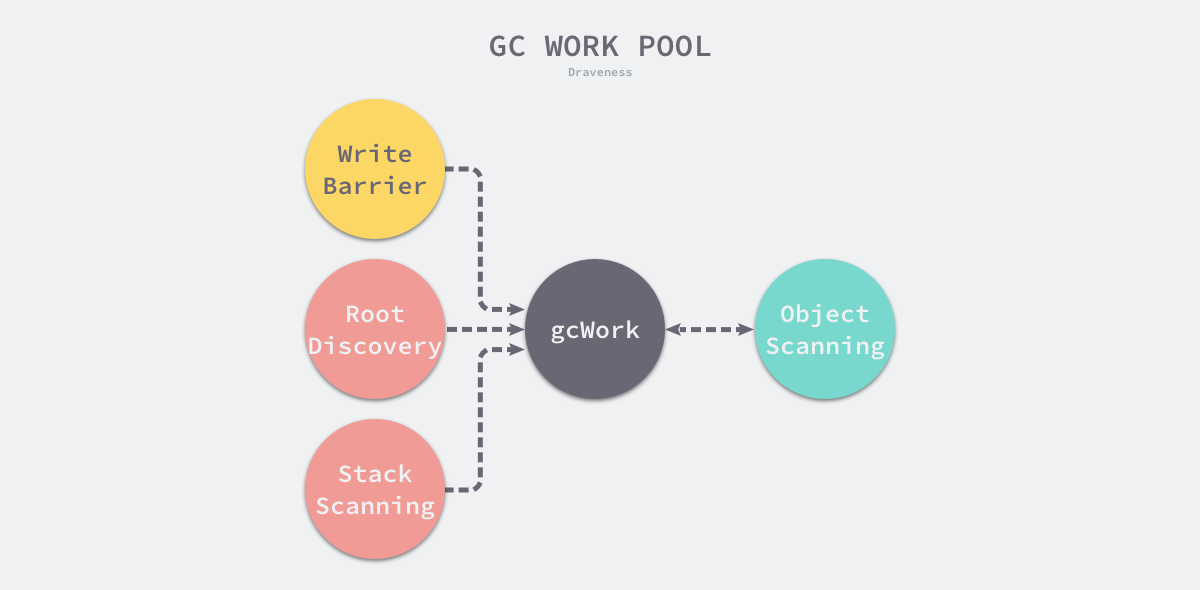

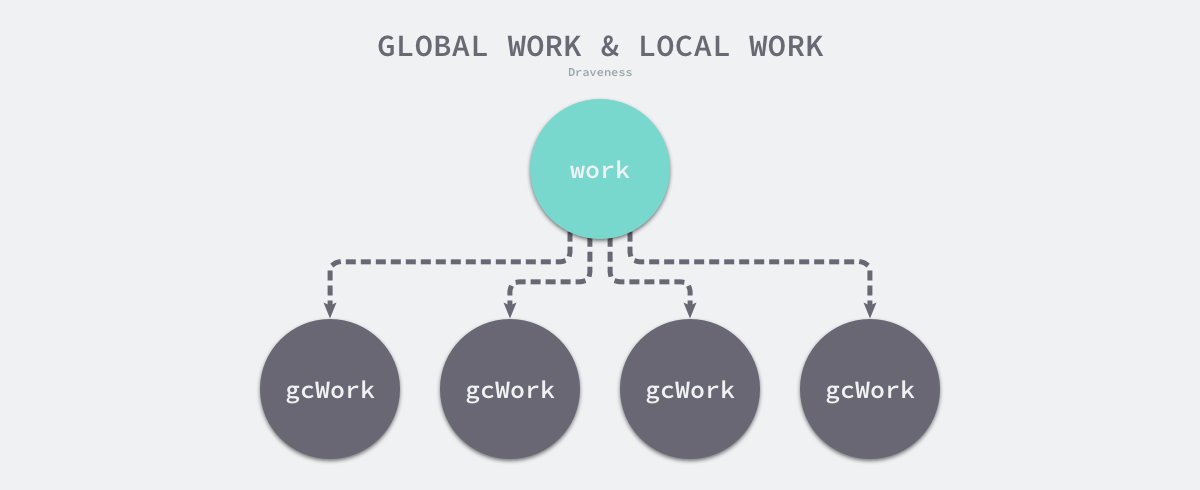

在调用 runtime.gcDrain 函数时,运行时会传入处理器上的 runtime.gcWork,这个结构体是垃圾收集器中工作池的抽象,它实现了一个生产者和消费者的模型,我们可以以该结构体为起点从整体理解标记工作:

写屏障、根对象扫描和栈扫描都会向工作池中增加额外的灰色对象等待处理,而对象的扫描过程会将灰色对象标记成黑色,同时也可能发现新的灰色对象,当工作队列中不包含灰色对象时,整个扫描过程就会结束。

为了减少锁竞争,运行时在每个处理器上会保存独立的待扫描工作,然而这会遇到与调度器一样的问题 — 不同处理器的资源不平均,导致部分处理器无事可做,调度器引入了工作窃取来解决这个问题,垃圾收集器也使用了差不多的机制平衡不同处理器上的待处理任务。

runtime.gcWork.balance 方法会将处理器本地一部分工作放回全局队列中,让其他的处理器处理,保证不同处理器负载的平衡。

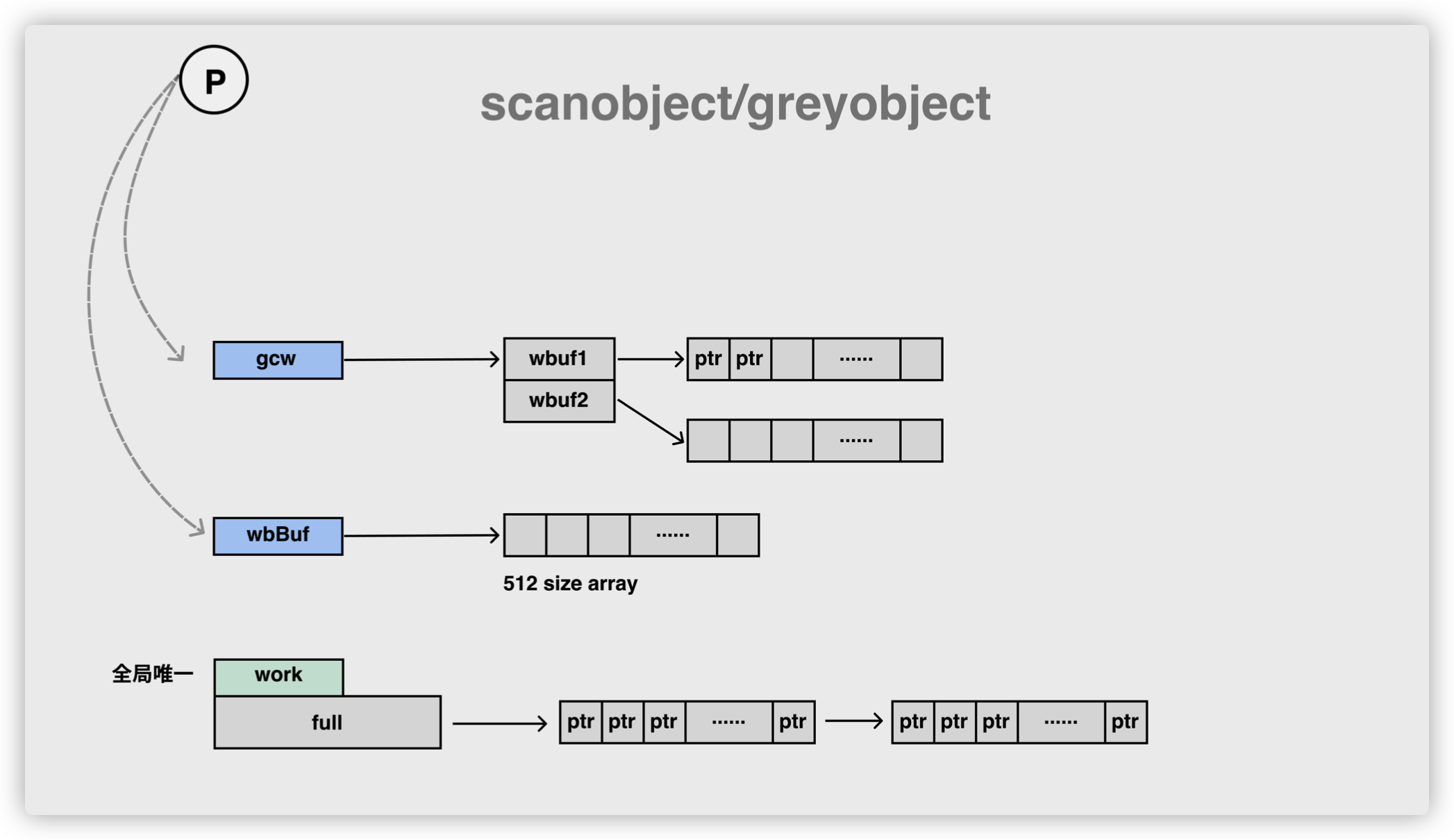

runtime.gcWork 为垃圾收集器提供了生产和消费任务的抽象,该结构体持有了两个重要的工作缓冲区 wbuf1 和 wbuf2,这两个缓冲区分别是主缓冲区和备缓冲区:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

type gcWork struct {

wbuf1, wbuf2 *workbuf

...

}

type workbufhdr struct {

node lfnode // must be first

nobj int

}

type workbuf struct {

workbufhdr

obj [(_WorkbufSize - unsafe.Sizeof(workbufhdr{})) / sys.PtrSize]uintptr

}

|

当我们向该结构体中增加或者删除对象时,它总会先操作主缓冲区,一旦主缓冲区空间不足或者没有对象,就会触发主备缓冲区的切换;而当两个缓冲区空间都不足或者都为空时,会从全局的工作缓冲区中插入或者获取对象,该结构体相关方法的实现都非常简单,这里就不展开分析了。

运行时会使用 runtime.gcDrain 函数扫描工作缓冲区中的灰色对象,它会根据传入 gcDrainFlags 的不同选择不同的策略:

gcDrain 这个函数就是从队列里不断获取,处理这些对象,最重要的一个就是调用 scanobject 继续扫描对象。

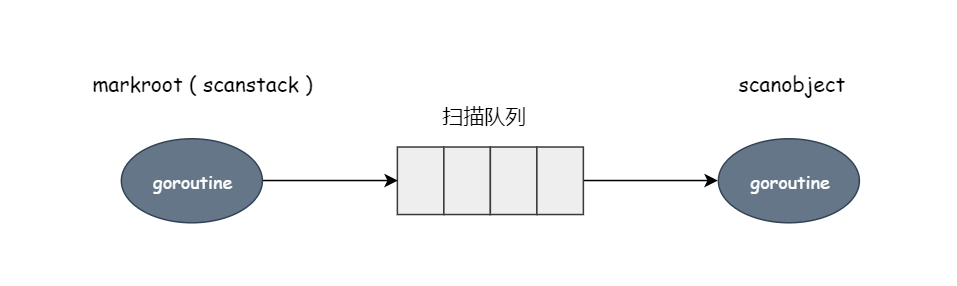

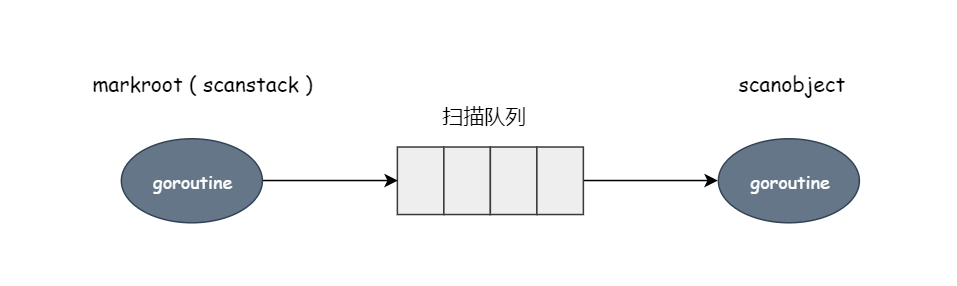

markroot 从根(栈)扫描,把扫描到的对象投入扫描队列。gcDrain 等函数从里面不断获取,不断处理,并且扫描这些对象,进一步挖掘引用关系,当扫描结束之后,那些没有扫描到的就是垃圾了。

findRunnableGCWorker

调度器在调度循环 runtime.schedule 中可以通过垃圾收集控制器的 findRunnableGCWorker 方法获取并执行用于后台标记的任务。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

// One round of scheduler: find a runnable goroutine and execute it.

// Never returns.

// 执行一轮调度器的工作:找到一个 runnable 的 goroutine,并且执行它

// 永不返回

func schedule() {

...

top:

//如果GC处于等待状态,停止M,等待GC完成被唤醒

//准备进入GC STW,休眠

//判断是否有串行运行时任务正在等待执行,判断依据就是调度器的gcwaiting字段是否为0。如果

//gcwaiting不为0,则停止并阻塞当前M直到串行运行时任务结束,才继续执行后面的调度动作。

//串行运行时任务执行时需要停止Go的调度器,官方称此操作为Stop the world,简称STW。

if sched.gcwaiting != 0 {

gcstopm()

goto top

}

if _g_.m.p.ptr().runSafePointFn != 0 {

runSafePointFn()

}

//接下来就是寻找可运行G的过程。首先试图获取执行踪迹读取任务的G。

var gp *g

//当从P.next提取G时,inheritTime = true

//不累加P.schedtick计数,使得它延长本地队列处理时间

var inheritTime bool

if trace.enabled || trace.shutdown {

gp = traceReader()

if gp != nil {

casgstatus(gp, _Gwaiting, _Grunnable)

traceGoUnpark(gp, 0)

}

}

//未果,试图获取执行GC标记任务的G。

//进入GC MarkWorker工作模式

if gp == nil && gcBlackenEnabled != 0 {

gp = gcController.findRunnableGCWorker(_g_.m.p.ptr())

}

...

}

|

垃圾收集控制器会在 runtime.gcControllerState.findRunnableGCWorker 方法中设置处理器的 gcMarkWorkerMode:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

|

// findRunnableGCWorker returns the background mark worker for _p_ if it

// should be run. This must only be called when gcBlackenEnabled != 0.

func (c *gcControllerState) findRunnableGCWorker(_p_ *p) *g {

if gcBlackenEnabled == 0 {

throw("gcControllerState.findRunnable: blackening not enabled")

}

if _p_.gcBgMarkWorker == 0 {

// The mark worker associated with this P is blocked

// performing a mark transition. We can't run it

// because it may be on some other run or wait queue.

return nil

}

if !gcMarkWorkAvailable(_p_) {

// No work to be done right now. This can happen at

// the end of the mark phase when there are still

// assists tapering off. Don't bother running a worker

// now because it'll just return immediately.

return nil

}

// 原子减少对应的值, 如果减少后大于等于0则返回true, 否则返回false

decIfPositive := func(ptr *int64) bool {

if *ptr > 0 {

if atomic.Xaddint64(ptr, -1) >= 0 {

return true

}

// We lost a race

atomic.Xaddint64(ptr, +1)

}

return false

}

// 减少dedicatedMarkWorkersNeeded, 成功时后台标记任务的模式是Dedicated

// dedicatedMarkWorkersNeeded是当前P的数量的25%去除小数点

// 详见startCycle函数

if decIfPositive(&c.dedicatedMarkWorkersNeeded) {

// This P is now dedicated to marking until the end of

// the concurrent mark phase.

_p_.gcMarkWorkerMode = gcMarkWorkerDedicatedMode

} else if c.fractionalUtilizationGoal == 0 {

// No need for fractional workers.

return nil

} else {

// Is this P behind on the fractional utilization

// goal?

//

// This should be kept in sync with pollFractionalWorkerExit.

// 减少fractionalMarkWorkersNeeded, 成功是后台标记任务的模式是Fractional

// 上面的计算如果小数点后有数值(不能够整除)则fractionalMarkWorkersNeeded为1, 否则为0

// 详见startCycle函数

// 举例来说, 4个P时会执行1个Dedicated模式的任务, 5个P时会执行1个Dedicated模式和1个Fractional模式的任务

delta := nanotime() - gcController.markStartTime

if delta > 0 && float64(_p_.gcFractionalMarkTime)/float64(delta) > c.fractionalUtilizationGoal {

// Nope. No need to run a fractional worker.

return nil

}

// Run a fractional worker.

_p_.gcMarkWorkerMode = gcMarkWorkerFractionalMode

}

// 安排后台标记任务执行

// Run the background mark worker

gp := _p_.gcBgMarkWorker.ptr()

casgstatus(gp, _Gwaiting, _Grunnable)

if trace.enabled {

traceGoUnpark(gp, 0)

}

return gp

}

|

控制器通过 dedicatedMarkWorkersNeeded 决定专门执行标记任务的 Goroutine 数量并根据执行标记任务的时间和总时间决定是否启动 gcMarkWorkerFractionalMode 模式的 Goroutine.

findrunnable

除了这两种控制器要求的工作协程之外,调度器还会在 findrunnable 函数中利用空闲的处理器执行垃圾收集以加速该过程:

当垃圾收集处于标记阶段并且当前处理器不需要做任何任务时,runtime.findrunnable 函数会在当前处理器上执行该 Goroutine 辅助并发的对象标记:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

|

// Finds a runnable goroutine to execute.

// Tries to steal from other P's, get g from global queue, poll network.

// 寻找一个可运行的 Goroutine 来执行。

// 尝试从其他的 P 偷取、从全局队列中获取、poll 网络

func findrunnable() (gp *g, inheritTime bool) {

top:

//1. 获取执行GC标记任务的G。如果恰巧正处于GC标记阶段,且本地P可用于GC标记任务。

//那么调度器会把本地P持有的GC标记专用G置为Grunnable状态并返回这个G。

//检查GC MarkWorker

// We have nothing to do. If we're in the GC mark phase, can

// safely scan and blacken objects, and have work to do, run

// idle-time marking rather than give up the P.

if gcBlackenEnabled != 0 && _p_.gcBgMarkWorker != 0 && gcMarkWorkAvailable(_p_) {

_p_.gcMarkWorkerMode = gcMarkWorkerIdleMode

gp := _p_.gcBgMarkWorker.ptr() //获取用于GC标记的专用G

casgstatus(gp, _Gwaiting, _Grunnable)//将gp并发安全的从Gwaiting状态转为Grunnable状态

if trace.enabled {

traceGoUnpark(gp, 0)

}

return gp, false

}

...

//4. 再次获取执行GC标记任务的G。如果正好处于GC标记阶段,且GC标记任务相关的全局资源可用。调度器就从空闲P列表中取出一个P,如果这个P持有GC标记专用G,就将该P与当前M关联,并从第二阶段开始继续执行。否则该P会被重新放回空闲P列表。

// Check for idle-priority GC work again.

if gcBlackenEnabled != 0 && gcMarkWorkAvailable(nil) {

lock(&sched.lock)

_p_ = pidleget()

if _p_ != nil && _p_.gcBgMarkWorker == 0 {

pidleput(_p_)

_p_ = nil

}

unlock(&sched.lock)

if _p_ != nil {

acquirep(_p_)

if wasSpinning {

_g_.m.spinning = true

atomic.Xadd(&sched.nmspinning, 1)

}

// Go back to idle GC check.

goto stop

}

}

...

goto top

}

func gcMarkWorkAvailable(p *p) bool {

if p != nil && !p.gcw.empty() {

return true

}

if !work.full.empty() {

return true // global work available

}

if work.markrootNext < work.markrootJobs {

return true // root scan work available

}

return false

}

|

gcBgMarkWorker

runtime.gcBgMarkWorker 是后台的标记任务执行的函数,该函数的循环中执行了对内存中对象图的扫描和标记,我们分三个部分介绍该函数的实现原理:

- 获取当前处理器以及 Goroutine 打包成 parkInfo 类型的结构体并主动陷入休眠等待唤醒;

- 根据处理器上的 gcMarkWorkerMode 模式决定扫描任务的策略;

- 所有标记任务都完成后,调用 runtime.gcMarkDone 方法完成标记阶段;

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24